AT A GLANCE

Jaro-Winkler and Levenshtein are string matching algorithms used for fuzzy matching in AML screening, sanctions monitoring, and name verification. Levenshtein distance calculates the minimum number of single-character edits (insertions, deletions, substitutions) needed to transform one string into another, making it well suited to longer strings like company names and addresses where every character matters equally. Jaro-Winkler similarity emphasizes matching at the beginning of strings and handles common typos efficiently, making it a strong choice for screening individual names with transliteration variations (like Arabic or Cyrillic names). Regulators like FinCEN don't mandate specific algorithms but require systems that effectively handle variations, aliases, and typographical errors. The best choice depends on your screening context: use Jaro-Winkler for fast, real-time individual name screening, and Levenshtein for precise matching of complex business entities and longer strings.

What Is Levenshtein Distance?

Levenshtein distance is a string similarity metric that calculates the minimum number of single-character edits required to transform one string into another. Named after Soviet mathematician Vladimir Levenshtein who introduced it in 1965, this algorithm counts three types of operations: insertions (adding a character), deletions (removing a character), and substitutions (replacing one character with another).

For example, transforming "Mohammad" into "Muhammad" requires one substitution ('o' to 'u'), giving a Levenshtein distance of 1. Transforming "kitten" to "sitting" requires three operations: substitute 'k' with 's', substitute 'e' with 'i', and insert 'g' at the end, resulting in a distance of 3.

The algorithm treats all character positions equally; an error at the beginning of a string carries the same weight as an error at the end. This makes Levenshtein particularly effective for comparing longer strings where accuracy throughout the entire string matters, such as company names, addresses, or detailed merchant descriptions.

In AML screening contexts, Levenshtein distance helps identify entities that may be attempting to evade detection through minor variations in their registered names, supporting a more effective AML compliance solution. A shell company named "Global Trading Solutions Ltd" might appear in sanctions lists as "Global Trading Solution Ltd" (missing the 's'), a single-character deletion that Levenshtein efficiently detects.

How Does Levenshtein Distance Work?

Levenshtein distance works by building a matrix that calculates the minimum edit operations needed at each step, using dynamic programming to find the optimal solution efficiently.

The algorithm compares two strings character by character, building a matrix where each cell represents the minimum edits needed to transform a prefix of the first string into a prefix of the second string. With the first string along the rows and the second along the columns, each cell takes the minimum of: the cell above plus 1 (deletion), the cell to the left plus 1 (insertion), or the diagonal cell plus 0 if the characters match or plus 1 if they don't (substitution). The bottom-right cell contains the final Levenshtein distance.
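The matrix construction described above can be sketched in a few lines of Python. This is a minimal illustration, not a production implementation; the function name `levenshtein` is chosen here for the example, and the labels for insertion and deletion assume the first string runs along the rows.

```python
def levenshtein(source: str, target: str) -> int:
    """Return the Levenshtein distance using the full dynamic-programming matrix."""
    rows, cols = len(source) + 1, len(target) + 1
    # dist[i][j] = edits needed to turn source[:i] into target[:j]
    dist = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        dist[i][0] = i  # delete all i characters of the source prefix
    for j in range(cols):
        dist[0][j] = j  # insert all j characters of the target prefix
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if source[i - 1] == target[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # cell above: deletion
                dist[i][j - 1] + 1,         # cell to the left: insertion
                dist[i - 1][j - 1] + cost,  # diagonal: match or substitution
            )
    return dist[-1][-1]  # bottom-right cell


print(levenshtein("kitten", "sitting"))  # 3
print(levenshtein("Smith", "Smyth"))     # 1
print(levenshtein("CAT", "DOG"))         # 3
```

The full matrix is kept here for readability; only the previous row is actually needed to compute the next one, which is how memory-conscious implementations usually work.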

For "Smith" and "Smyth", the distance is only 1 (substitute 'i' with 'y'). For completely different words like "CAT" and "DOG", the distance is 3 because you need to substitute all three characters.

The standard Levenshtein algorithm has a time complexity of O(m×n) where m and n are the lengths of the two strings. For a 10-character string compared against a 10-character string, this means filling roughly 100 matrix cells. While this is efficient for individual comparisons, screening thousands of names against watchlists with thousands of entries requires optimization techniques such as early termination when distances exceed thresholds.
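One way to implement the early-termination idea is sketched below, assuming a caller-supplied cutoff. The name `bounded_levenshtein` and the `max_distance` parameter are illustrative choices, not part of any particular library. The sketch keeps only two rows of the matrix and gives up as soon as the result can no longer fall within the cutoff.

```python
from typing import Optional


def bounded_levenshtein(a: str, b: str, max_distance: int) -> Optional[int]:
    """Return the distance if it is <= max_distance, otherwise None."""
    # The difference in length is a lower bound on the edit distance.
    if abs(len(a) - len(b)) > max_distance:
        return None
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(
                previous[j] + 1,         # deletion
                current[j - 1] + 1,      # insertion
                previous[j - 1] + cost,  # match or substitution
            ))
        # The smallest value in a row never decreases from one row to the
        # next, so once a whole row exceeds the cutoff we can stop early.
        if min(current) > max_distance:
            return None
        previous = current
    return previous[-1] if previous[-1] <= max_distance else None


print(bounded_levenshtein("kitten", "sitting", 3))  # 3
print(bounded_levenshtein("kitten", "sitting", 2))  # None
```

With a small cutoff, most non-matching watchlist entries are rejected after a length check or a few rows, which is where the savings come from in bulk screening.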

What Is Jaro-Winkler Similarity?

Jaro-Winkler similarity is a string matching algorithm that calculates how similar two strings are, producing a score between 0 (completely different) and 1 (identical). Developed by Matthew Jaro in 1989 and refined by William Winkler in 1990, this algorithm emphasizes matching characters at the beginning of strings, making it particularly effective for names where first characters are less likely to contain errors.

The algorithm works in two stages: first calculating the base Jaro similarity, then applying a Winkler modification that boosts the score for strings that match from the beginning. This prefix-weighting reflects real-world patterns where people are more careful with initial letters of names and where transliteration errors more commonly occur in middle or end portions.

For example, "Jon Smith" and "John Smith" produce a Jaro-Winkler similarity score of approximately 0.97 (very high similarity) because they differ by only one inserted character. "Mohammad" and "Muhammad" also score highly at around 0.93, demonstrating the algorithm's effectiveness with transliteration variations common in Arabic names.

In AML screening, Jaro-Winkler excels at catching common name variations that appear on sanctions lists. A person might be listed as "Mohammed," "Mohammad," "Muhammed," or "Mohamed," all legitimate transliterations of the same Arabic name. Jaro-Winkler's design specifically handles these variations more effectively than algorithms that don't weight prefix matches.

How Does Jaro-Winkler Similarity Work?

Jaro-Winkler similarity works by first calculating the Jaro similarity score, then applying a prefix adjustment that rewards string matching at the beginning.

The base Jaro similarity formula is: Jaro = (m/|s1| + m/|s2| + (m-t)/m) / 3

Where m equals the number of matching characters, |s1| and |s2| are the lengths of the two strings, and t equals the number of transpositions (half the number of matching characters that appear in a different order). Two characters are considered "matching" if they're the same and no more than floor(max(|s1|, |s2|)/2) - 1 positions apart.

The Winkler modification adds a prefix bonus: Jaro-Winkler = Jaro + (L × P × (1 - Jaro))

Where L is the length of the common prefix at the start (up to 4 characters) and P is the prefix scaling factor (standard value is 0.1).

For example, comparing "MARTHA" and "MARHTA", which share a common prefix of 3 characters (MAR), produces a base Jaro score of 0.944 (6 matching characters and 1 transposition); the Winkler modification then adds 0.017 for a final score of 0.961. This boost rewards the strong beginning match, reflecting how name matching in practice benefits from prefix accuracy.
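The two formulas can be checked with a short Python sketch. It assumes the common textbook conventions: a match window of floor(max(|s1|, |s2|)/2) - 1, transpositions counted as half the number of out-of-order matched characters, a prefix capped at 4 characters, and a scaling factor of 0.1. The function names `jaro` and `jaro_winkler` are chosen for this example; library implementations may differ in edge cases.

```python
def jaro(s1: str, s2: str) -> float:
    """Base Jaro similarity between two strings (0.0 to 1.0)."""
    if s1 == s2:
        return 1.0
    if not s1 or not s2:
        return 0.0
    window = max(max(len(s1), len(s2)) // 2 - 1, 0)
    matched1 = [False] * len(s1)
    matched2 = [False] * len(s2)
    matches = 0
    for i, ch in enumerate(s1):
        start = max(0, i - window)
        stop = min(len(s2), i + window + 1)
        for j in range(start, stop):
            # Take the first unused identical character inside the window.
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Compare the matched characters in order; each swapped pair counts once.
    seq1 = [c for c, hit in zip(s1, matched1) if hit]
    seq2 = [c for c, hit in zip(s2, matched2) if hit]
    transpositions = sum(a != b for a, b in zip(seq1, seq2)) // 2
    return (matches / len(s1) + matches / len(s2)
            + (matches - transpositions) / matches) / 3


def jaro_winkler(s1: str, s2: str, scaling: float = 0.1, max_prefix: int = 4) -> float:
    """Jaro similarity plus the Winkler bonus for a shared prefix."""
    base = jaro(s1, s2)
    prefix = 0
    for a, b in zip(s1[:max_prefix], s2[:max_prefix]):
        if a != b:
            break
        prefix += 1
    return base + prefix * scaling * (1 - base)


print(round(jaro("MARTHA", "MARHTA"), 3))          # 0.944
print(round(jaro_winkler("MARTHA", "MARHTA"), 3))  # 0.961
```

Note that the comparison is case-sensitive as written, so inputs would normally be lowercased and cleaned before scoring.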

People are more consistent with the beginning of names. When transcribing or transliterating names, the first few characters typically have higher accuracy while variations accumulate toward the end. A Russian name "Владимир" might be transliterated as "Vladimir," "Wladimir," or "Vladimyr," all sharing the initial "Vla" or "Wla" but varying in the middle and end.

What's the Difference Between Jaro-Winkler and Levenshtein?

The fundamental difference lies in what they measure and how they weigh character positions. Levenshtein counts edit operations needed for transformation, while Jaro-Winkler calculates similarity as a percentage. Levenshtein treats all positions equally; Jaro-Winkler emphasizes prefix matches.

Levenshtein produces a distance (integer count of edits), where lower is better. A distance of 0 means identical strings, while higher numbers indicate greater difference. Jaro-Winkler produces a similarity score (decimal between 0 and 1), where higher is better. A score of 1.0 means identical strings, while scores approaching 0 indicate very different strings.

Consider comparing "Thompson" with "Thomson" (one character difference at position 5): Levenshtein distance is 1, while Jaro-Winkler similarity is approximately 0.975. Now compare "Thompson" with "Shompson" (one character difference at position 1): Levenshtein distance is still 1, but Jaro-Winkler similarity drops to approximately 0.92. Levenshtein assigns both scenarios the same distance, while Jaro-Winkler distinguishes them.
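To put Levenshtein on the same 0-to-1 scale as a similarity score, a common approach is to divide the distance by the length of the longer string. The sketch below assumes that normalization (other conventions exist) and uses the illustrative helper name `normalized_similarity`. It shows that the result is the same whether the edit falls at the start or in the middle of the name.

```python
def levenshtein(a: str, b: str) -> int:
    """Levenshtein distance using two rows of the matrix."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            cost = 0 if char_a == char_b else 1
            current.append(min(previous[j] + 1, current[j - 1] + 1, previous[j - 1] + cost))
        previous = current
    return previous[-1]


def normalized_similarity(a: str, b: str) -> float:
    """Map the edit distance to a 0..1 score; 1.0 means identical strings."""
    longest = max(len(a), len(b))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(a, b) / longest


# One edit in the middle versus one edit at the very start:
print(levenshtein("Thompson", "Thomson"))             # 1
print(levenshtein("Thompson", "Shompson"))            # 1
print(normalized_similarity("Thompson", "Thomson"))   # 0.875
print(normalized_similarity("Thompson", "Shompson"))  # 0.875
```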

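Levenshtein has O(m×n) time complexity, where m and n are string lengths. A straightforward Jaro-Winkler implementation is also quadratic in the worst case, because each character is checked against a window of positions in the other string, but it stops at the first match inside that window and does less work per step, so it tends to be faster in practice. For typical name lengths (10-30 characters), the difference is negligible.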

When Should You Use Levenshtein Distance for AML Screening?

Use Levenshtein distance when screening longer strings where precision throughout the entire string matters and when character differences at any position carry equal significance.

Company and Business Name Matching: Corporate entities often have complex, multi-word names where changes anywhere in the string indicate potential evasion. "ABC Trading Solutions Limited" vs. "ABC Trading Solution Limited" (missing 's') are variations Levenshtein handles well. Corporate banks, correspondent banks, and B2B fintech services benefit most from Levenshtein's even-handed character treatment.

Address Verification: Addresses contain structured information where accuracy throughout matters: street numbers, street names, unit numbers, cities, postal codes. A single digit error in a street number (123 Main Street vs. 128 Main Street) represents a completely different location.

Merchant Onboarding Processes: Payment processors screening merchant names often deal with business entities that include descriptive terms: "Joe's Pizza & Pasta Restaurant LLC." These longer strings with punctuation, legal suffixes, and descriptive terms benefit from Levenshtein's systematic character-by-character comparison.

Financial institutions handling these scenarios should prioritize Levenshtein: corporate and institutional banking divisions, trade finance operations, correspondent banking relationships, payment processors onboarding merchants, and commercial lending operations.

When Should You Use Jaro-Winkler Similarity for AML Screening?

Use Jaro-Winkler when screening individual names with common spelling variations, handling transliteration differences, and when processing speed for short strings matters.

Individual Customer Name Screening: Retail customers typically have names with 10-30 characters where the beginning is most reliable. "Muhammad," "Mohammad," "Mohammed," and "Mohamed" all share the strong prefix "Moh" or "Muh" that Jaro-Winkler leverages. Retail banks, consumer fintechs, and digital wallets processing thousands of individual customers benefit from Jaro-Winkler's efficiency.

Transliteration Variations: Names from Arabic, Cyrillic, Chinese, or other non-Latin alphabets have multiple valid English transliterations. A Russian name "Владимир Путин" appears as "Vladimir Putin," "Wladimir Putin," or "Vladimyr Putin" depending on the transliteration system. Jaro-Winkler handles these prefix-consistent variations better than strict edit distance algorithms.

Common Typos and Misspellings: Data entry errors often involve single character mistakes, transpositions (letters swapped), or omissions. "Jon" vs. "John," "Cathy" vs. "Kathy," or "Steven" vs. "Stephen" are variations Jaro-Winkler efficiently identifies with high similarity scores.

High-Volume Real-Time Screening: Payment processors handling thousands of transactions per second need fast algorithmic execution. Jaro-Winkler's windowed comparison keeps the cost of each comparison low on short strings, which provides speed advantages when screening large volumes.

Financial institutions handling these scenarios should prioritize Jaro-Winkler: retail and consumer banking, payment processors and remittance services, digital wallets and mobile payment apps, neobanks with primarily individual customers, and money service businesses.

How Do These Algorithms Apply to AML Screening and Compliance?

Both algorithms play critical roles in sanctions screening, PEP (politically exposed person) identification, and adverse media monitoring, the three pillars of AML name screening.

Financial institutions must screen customers and transactions against sanctions lists maintained by OFAC (Office of Foreign Assets Control), UN, EU, and other regulatory bodies. These lists contain names with multiple spellings, aliases, transliterations, and known variations. A single sanctioned individual might appear with 5-10 name variations. "Qasem Soleimani" appears as "Qassem Soleimani," "Qasim Soleimani," "Kassem Soleimani," and other variants.

The choice between Jaro-Winkler and Levenshtein affects your match rates and false positive rates. Jaro-Winkler typically produces fewer false positives for name screening because its prefix weighting reduces spurious matches, while Levenshtein provides more comprehensive coverage for business entity screening.

Regulators like FinCEN, FCA, and MAS don't mandate specific algorithms. What they require is demonstrable effectiveness: your system must catch variations, handle typos, identify aliases, and minimize false negatives while keeping false positives manageable. During regulatory examinations, you must justify your algorithm choice based on your customer profile and risk assessment.

Documentation matters. Effective AML compliance requires a program that includes written justification for algorithmic choices, documented testing results showing performance against sample watchlists, false positive rate analysis, and periodic reviews confirming the algorithm remains appropriate over time.

What Are the Pros and Cons of Each Algorithm?

Understanding the strengths and limitations of each algorithm helps you make informed implementation decisions.

Levenshtein Advantages and Disadvantages

Advantages: Precision across the entire string with every character receiving equal consideration. Intuitive edit count metric that directly represents the number of changes needed. Threshold setting clarity with absolute thresholds that remain consistent. Well-studied and standardized with predictable behavior.

Disadvantages: Computational complexity of O(m×n) becomes expensive for very long strings or massive volumes. No position weighting can be a disadvantage for name matching where prefix accuracy matters more. Threshold sensitivity requires extensive testing to find optimal values.

Jaro-Winkler Advantages and Disadvantages

Advantages: Optimized for name matching with prefix weighting that aligns with real-world variations. Typically fast on short strings, because each character is compared only against a limited match window. Normalized score range makes threshold setting intuitive. Handles transpositions well with adjacent character swaps receiving lighter penalties.

Disadvantages: Less effective for long strings where prefix weighting becomes insignificant. Prefix assumption limitations when prefix accuracy isn't higher than mid-string accuracy. Less intuitive for non-technical users requiring more interpretation. Parameter sensitivity with somewhat arbitrary standard values.

Frequently Asked Questions

What is Jaro-Winkler similarity and how is it calculated?

Jaro-Winkler similarity is a string matching algorithm that produces a score between 0 and 1 indicating how similar two strings are, with extra weight given to matching prefixes. It's calculated by first computing the base Jaro similarity, then applying a Winkler modification that adds a bonus based on common prefix length up to 4 characters.

What is Levenshtein distance used for in AML?

Levenshtein distance in AML is primarily used for matching company names, business entities, addresses, and detailed merchant descriptions during sanctions screening. It calculates the minimum number of character edits needed to transform one string into another, treating all character positions equally.

Which algorithm is faster for name matching?

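Jaro-Winkler is often faster in practice on short strings, although both algorithms are quadratic in the worst case (Levenshtein is O(m×n), and a straightforward Jaro-Winkler implementation scans a window of positions for each character). For typical name lengths both execute very quickly, but at high volumes (thousands of comparisons per second) even small per-comparison differences add up, so it is worth benchmarking both on your own data.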

Can you use both algorithms together in AML screening?

Yes, sophisticated AML screening systems often use hybrid approaches combining both algorithms. You might apply Jaro-Winkler for initial fast screening of individual names, then use Levenshtein for secondary verification of company entities, maximizing detection while managing false positive rates.

What is a good similarity threshold for Jaro-Winkler in name screening?

Most AML screening systems use Jaro-Winkler thresholds between 0.80 and 0.90 for name matching. A threshold of 0.85 is common, meaning strings must be 85% similar to trigger alerts. The optimal threshold depends on your risk appetite and customer profile.

What is the Damerau-Levenshtein distance?

Damerau-Levenshtein distance extends standard Levenshtein by adding transpositions (swapping adjacent characters) as a fourth edit operation. This makes it more effective for catching common typing errors where adjacent letters are reversed, like "receive" vs "recieve."
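A minimal sketch of the idea follows. It implements the restricted variant usually called optimal string alignment, which allows each adjacent pair to be swapped at most once; the unrestricted Damerau-Levenshtein distance needs extra bookkeeping and can give smaller values on some inputs. The function name `osa_distance` is chosen for this example.

```python
def osa_distance(a: str, b: str) -> int:
    """Edit distance with insert, delete, substitute, and adjacent swap."""
    rows, cols = len(a) + 1, len(b) + 1
    dist = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        dist[i][0] = i
    for j in range(cols):
        dist[0][j] = j
    for i in range(1, rows):
        for j in range(1, cols):
            cost = 0 if a[i - 1] == b[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # match or substitution
            )
            # Fourth operation: the last two characters are swapped.
            if i > 1 and j > 1 and a[i - 1] == b[j - 2] and a[i - 2] == b[j - 1]:
                dist[i][j] = min(dist[i][j], dist[i - 2][j - 2] + 1)
    return dist[-1][-1]


print(osa_distance("receive", "recieve"))  # 1 (plain Levenshtein gives 2)
```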

How do regulators view algorithm selection for AML screening?

Regulators don't mandate specific algorithms but require that your screening system effectively handles name variations, typos, aliases, and transliterations. You must demonstrate that your algorithm choice is appropriate for your customer base with testing results and periodic reviews.

How do you implement Levenshtein distance in Python?

Python offers multiple Levenshtein implementations: the python-Levenshtein library (fast C implementation), the rapidfuzz library (modern, highly optimized), and simple custom implementations. For production AML systems, use optimized libraries like rapidfuzz that provide fast batch processing.

Implementing String Matching Algorithms: Practical Strategies

Tip 1: Test Algorithms Against Your Actual Data

Take a sample of 1,000 customer names and 100 sanctions list names, then test both algorithms measuring true positives, false positives, and false negatives. Your data's characteristics determine which algorithm performs better.
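A test harness for this kind of comparison might look like the sketch below. It assumes you have a hand-labeled set of (customer name, listed name, is a true match) pairs; the `evaluate` function, the sample pairs, and the `sequence_ratio` stand-in scorer (built on Python's standard `difflib`) are illustrative, and in practice you would pass in your Jaro-Winkler or normalized Levenshtein scorer instead.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, Iterable, Tuple

# (customer name, listed name, whether a reviewer confirmed a true match)
LabeledPair = Tuple[str, str, bool]


def sequence_ratio(a: str, b: str) -> float:
    """Stand-in scorer from the standard library; swap in your own."""
    return SequenceMatcher(None, a, b).ratio()


def evaluate(pairs: Iterable[LabeledPair],
             scorer: Callable[[str, str], float],
             threshold: float) -> Dict[str, float]:
    """Count outcomes at one threshold and derive precision and recall."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for customer, listed, is_match in pairs:
        flagged = scorer(customer, listed) >= threshold
        if flagged and is_match:
            counts["tp"] += 1
        elif flagged and not is_match:
            counts["fp"] += 1
        elif not flagged and is_match:
            counts["fn"] += 1
        else:
            counts["tn"] += 1
    tp, fp, fn = counts["tp"], counts["fp"], counts["fn"]
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {**counts, "precision": precision, "recall": recall}


# Hypothetical labeled sample for illustration only.
sample = [
    ("jon smith", "john smith", True),
    ("mohamed ali", "mohammed ali", True),
    ("jane doe", "john smith", False),
]
print(evaluate(sample, sequence_ratio, threshold=0.85))
```

Running the same labeled set through each candidate scorer at several thresholds gives a like-for-like view of the trade-off between missed matches and false alerts.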

Tip 2: Normalize Strings Before Comparison

Always preprocess strings by converting to lowercase, removing special characters and punctuation, handling multiple spaces, and stripping common business suffixes. "ABC Trading Co., Ltd" and "abc trading co" should be normalized before comparison.
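These preprocessing steps can be sketched as a single function. The suffix list below is a small hypothetical sample, and the choice to strip suffixes only from the end of the name is an assumption made for this example; a real deployment would maintain its own list and rules.

```python
import re

# Hypothetical suffix list for illustration; extend it for your jurisdictions.
BUSINESS_SUFFIXES = {"ltd", "limited", "llc", "inc", "co", "corp", "plc", "gmbh"}


def normalize_name(raw: str) -> str:
    """Lowercase, strip punctuation, collapse spaces, drop trailing suffixes."""
    lowered = raw.casefold()
    # Drop apostrophes outright so "Joe's" becomes "joes", not "joe s".
    lowered = re.sub(r"['’]", "", lowered)
    # Replace any other punctuation or symbol with a space.
    cleaned = re.sub(r"[^\w\s]|_", " ", lowered)
    # split() with no arguments also collapses runs of whitespace.
    tokens = cleaned.split()
    # Remove legal suffixes from the end only, keeping at least one token.
    while len(tokens) > 1 and tokens[-1] in BUSINESS_SUFFIXES:
        tokens.pop()
    return " ".join(tokens)


print(normalize_name("ABC Trading Co., Ltd"))  # abc trading
print(normalize_name("abc trading co"))        # abc trading
```

Applying the same function to both the customer record and the watchlist entry matters more than the exact rules, since any asymmetry shows up as spurious edit distance.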

Tip 3: Combine Algorithmic Matching with Contextual Filters

Use metadata to reduce false positives: match date of birth alongside name, verify country of origin, check gender if available, and compare business type. Two people with similar names but different birth dates are likely different individuals.
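A contextual filter of this kind could be sketched as follows. The `Party` record and its fields are hypothetical, and the rule shown (dismiss a name hit only when a field known on both sides disagrees) is one possible policy rather than a recommendation; missing data is treated as inconclusive so that the alert still goes to review.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Party:
    """Hypothetical record holding a name plus optional identifying metadata."""
    name: str
    date_of_birth: Optional[date] = None
    country: Optional[str] = None


def passes_context(customer: Party, listed: Party) -> bool:
    """Return False only when a field present on both records disagrees."""
    for field in ("date_of_birth", "country"):
        ours = getattr(customer, field)
        theirs = getattr(listed, field)
        if ours is not None and theirs is not None and ours != theirs:
            return False
    return True


customer = Party("john smith", date(1980, 1, 1), "GB")
same_dob = Party("jon smith", date(1980, 1, 1))
other_dob = Party("jon smith", date(1975, 5, 5), "GB")
print(passes_context(customer, same_dob))   # True
print(passes_context(customer, other_dob))  # False
```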

Tip 4: Implement Tiered Screening for Efficiency

Use fast algorithms like Jaro-Winkler for initial high-volume screening, then apply more expensive algorithms or manual review to results that pass the first tier, balancing thoroughness with processing speed.
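The two-tier flow can be sketched with pluggable scorers. Everything named here is illustrative: `tiered_screen` is a hypothetical helper, and the two scorers are standard-library stand-ins (a cheap character-bigram overlap and `difflib`'s sequence ratio) used only so the example runs on its own. In practice the first tier might be Jaro-Winkler and the second a costlier comparison or a queue for manual review.

```python
from difflib import SequenceMatcher
from typing import Callable, List, Tuple

Scorer = Callable[[str, str], float]


def bigram_overlap(a: str, b: str) -> float:
    """Cheap stand-in scorer: Jaccard overlap of character bigrams."""
    grams_a = {a[i:i + 2] for i in range(len(a) - 1)}
    grams_b = {b[i:i + 2] for i in range(len(b) - 1)}
    if not grams_a or not grams_b:
        return 1.0 if a == b else 0.0
    return len(grams_a & grams_b) / len(grams_a | grams_b)


def sequence_ratio(a: str, b: str) -> float:
    """Costlier stand-in scorer from the standard library."""
    return SequenceMatcher(None, a, b).ratio()


def tiered_screen(name: str, watchlist: List[str], fast: Scorer, slow: Scorer,
                  fast_cutoff: float, slow_cutoff: float) -> List[Tuple[str, float]]:
    """Run the fast scorer on every entry, the slow scorer on survivors only."""
    survivors = [entry for entry in watchlist if fast(name, entry) >= fast_cutoff]
    scored = [(entry, slow(name, entry)) for entry in survivors]
    hits = [pair for pair in scored if pair[1] >= slow_cutoff]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)


watchlist = ["qasem soleimani", "john smith", "global trading ltd"]
hits = tiered_screen("qassem soleimani", watchlist,
                     fast=bigram_overlap, slow=sequence_ratio,
                     fast_cutoff=0.3, slow_cutoff=0.85)
print(hits)
```

The first-tier cutoff should be loose enough that it never drops a true match; its only job is to spare the second tier from obviously unrelated entries.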

Tip 5: Document Threshold Selection Rationale

Maintain written documentation explaining why you chose specific similarity thresholds, including testing methodology, false positive and false negative rates, and risk assessment justifying the selected balance.

Tip 6: Build Feedback Loops for Continuous Improvement

Track all screening decisions (confirmed matches, dismissed false positives, and missed matches discovered later). Use this data to continuously refine thresholds, adjust parameters, and evaluate whether algorithm changes are needed.

Tip 7: Use Phonetic Algorithms as Supplementary Checks

Combine edit distance or similarity algorithms with phonetic matching (Soundex, Metaphone) as a secondary verification layer. Names that sound similar but spell differently benefit from phonetic algorithms.
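As an illustration of the phonetic layer, here is a minimal sketch of American Soundex. It assumes ASCII letters only and follows the common rule that 'h' and 'w' do not separate repeated codes; the function name `soundex` is chosen for this example. Names written in other scripts or with diacritics would need transliteration first.

```python
# Consonant groups that share a Soundex digit.
_GROUPS = (("bfpv", "1"), ("cgjkqsxz", "2"), ("dt", "3"),
           ("l", "4"), ("mn", "5"), ("r", "6"))
_CODES = {letter: digit for letters, digit in _GROUPS for letter in letters}


def soundex(name: str) -> str:
    """Return the four-character Soundex code, or '' if there are no letters."""
    letters = [ch for ch in name.lower() if ch.isascii() and ch.isalpha()]
    if not letters:
        return ""
    first = letters[0]
    encoded = first.upper()
    previous = _CODES.get(first, "")
    for ch in letters[1:]:
        if ch in "hw":
            continue  # 'h' and 'w' do not separate repeated codes
        code = _CODES.get(ch, "")
        if code and code != previous:
            encoded += code
        previous = code  # a vowel resets this, so a repeated code is kept
    return (encoded + "000")[:4]


print(soundex("Mohammed"), soundex("Muhammad"), soundex("Mohamed"))  # M530 M530 M530
print(soundex("Smith"), soundex("Smyth"))                            # S530 S530
```

Because the code keeps the first letter as-is, Soundex will not link "Cathy" and "Kathy"; this is one reason it is used as a supplementary check rather than the primary matcher.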

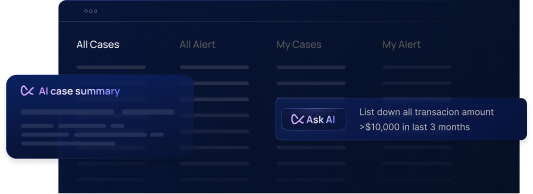

Tip 8: Leverage Modern AML Platforms with Configurable Algorithms

Rather than building custom screening systems, use specialized AML platforms like Flagright that offer algorithm selection, configurable thresholds, AI-driven false positive reduction, and complete audit trails.

Choosing between Jaro-Winkler and Levenshtein for AML screening isn't about finding a universally superior algorithm; it's about matching the algorithm's strengths to your specific screening requirements. Jaro-Winkler excels at individual name matching with its prefix weighting and computational efficiency, making it well suited to retail banking and payment processing. Levenshtein provides precision across entire strings, making it essential for corporate banking and business entity screening. The most sophisticated AML programs implement hybrid approaches that apply the right tool to each screening context. Ultimately, the best compliance outcomes result from combining these algorithmic choices with intelligent AI-driven false positive handling, which is the approach offered by Flagright. Request a personalized demo to see how this approach supports accurate, scalable compliance workflows.
