Imagine your organization has found a promising AI agent to help handle AML alerts. It promises to triage cases faster, reduce false positives, and even write draft SAR narratives. Exciting, right? But before you let this AI loose in your compliance program, you face a pivotal checkpoint: User Acceptance Testing (UAT). UAT is the moment of truth where your team puts the AI through its paces using real-world scenarios to ensure it actually works as advertised, and to catch any issues early. In short, a great UAT can spell confidence and success, while a poor or rushed UAT can spell disaster. In this piece, we’ll explore how to structure, run, and validate a successful AML UAT when evaluating AI-driven alert handling, and how to “fail fast” if needed (better to fail in testing than in production!). We’ll cover dataset design, scenario matrices, adjudication standards, pass/fail criteria, and documentation practices. We’ll also tie UAT to regulatory expectations (think OCC 2011-12 and SR 11-7 on model risk management), audit preparedness, and model governance, including human-in-the-loop controls and traceability. By the end, you should know what to test, how long it might take, who to involve, and what “good” looks like.

The Critical Role of UAT in AML AI Deployments

When introducing an AI agent into a BSA/AML compliance workflow, the stakes are high. You’re operating in a regulated, high-risk domain: if the AI misses a true suspicious activity or makes inconsistent decisions, your financial institution (FI) could face regulatory scrutiny or even allow illicit activity to slip through. Regulators and auditors won’t accept “the AI told me so” as an excuse; they expect you to prove that your systems (even AI-driven ones) are effective and under control. This is where UAT comes in. UAT is more than a routine checkbox before go-live; it’s your safety net and proving ground. It’s the phase where the people who will actually use the system (your AML compliance analysts, investigators, etc.) validate that the AI meets their needs and expectations in a realistic setting.

A well-structured AML UAT gives everyone (compliance officers, risk managers, IT, and even regulators) confidence that the AI does what it’s supposed to do, and that you have evidence to back that up. It’s far better to discover any shortcomings now, in a controlled test, than later when SAR deadlines or examiners are on the line. In the best case, UAT builds trust in the AI; in the worst case, UAT allows you to “fail fast”, i.e. identify a deal-breaking issue early and either fix it or decide not to proceed with deployment. In both cases, UAT protects you.

Regulators expect FIs to thoroughly test and validate any models or AI tools before use. In fact, the OCC’s model risk management guidance (OCC 2011-12, echoed by the Federal Reserve’s SR 11-7) explicitly states that FIs are expected to validate their own use of vendor products. In other words, even if an AI solution comes from a third-party vendor with great credentials, you, the FI, must verify it works as intended in your environment. UAT is a key part of that verification. Moreover, regulators care about outcomes and controls more than the fancy technology: if you deploy an AI to handle alerts, you’ll need to demonstrate explainability, oversight, and documentation around it. A rigorous UAT process helps you generate that documentation and set up those oversight mechanisms from day one.

Planning a Successful AML UAT: Dataset and Scenario Design

Start with a plan. A great UAT doesn’t happen ad-hoc; it is carefully planned with clear goals, scenarios, and success criteria. Here’s how to structure the planning:

1. Define Objectives and Scope:

What exactly are you testing? For an AML alert-handling AI, UAT might aim to verify the AI’s alert disposition decisions (e.g. does it correctly clear vs. escalate alerts?), the quality of its explanations or narratives, its integration into your case management workflow, and its performance on various typologies (fraud, money laundering patterns, sanctions hits, etc.). Define the scope up front: are you testing on historical alerts, a live parallel run, or a mix? Are you focusing on transaction monitoring alerts, KYC reviews, or other tasks? Nail this down so everyone knows the boundaries.

2. Assemble a Representative Dataset:

The power of UAT comes from using data and scenarios that mirror your production environment. Plan to use historical alerts and cases from your FI that have known outcomes. For example, you might curate a set of past alerts including a mix of true positives (cases that were escalated and perhaps resulted in SARs) and false positives (alerts that were cleared as not suspicious). Make sure to cover the diversity of your business: include different products, customer types, geographies, and typologies. If your FI deals with correspondent banking, crypto transactions, trade finance, etc., include examples of each. The dataset should be large enough to be statistically meaningful but not so large that the UAT becomes unmanageable; a few hundred alerts or a couple of months of data is often used, depending on your alert volumes. Use data that is representative of production conditions (volume, complexity, noise) so that test results will generalize.
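One way to keep the test set representative is to sample historical alerts by stratum so that every typology and outcome is present. The sketch below is illustrative only: the field names (`typology`, `outcome`), the strata, and the per-stratum size are assumptions, not a prescribed design.

```python
import random
from collections import defaultdict

def stratified_sample(alerts, strata_keys, per_stratum, seed=0):
    """Pick up to `per_stratum` alerts from each combination of `strata_keys`."""
    rng = random.Random(seed)  # fixed seed so the selection can be reproduced later
    buckets = defaultdict(list)
    for alert in alerts:
        buckets[tuple(alert[k] for k in strata_keys)].append(alert)
    sample = []
    for key in sorted(buckets):
        group = buckets[key]
        sample.extend(rng.sample(group, min(per_stratum, len(group))))
    return sample

# Hypothetical historical alerts with known outcomes (5 strata, 20 alerts each).
combos = [
    ("structuring", "escalated"), ("structuring", "cleared"),
    ("sanctions", "escalated"), ("sanctions", "cleared"),
    ("trade_finance", "cleared"),
]
history = [
    {"id": i, "typology": t, "outcome": o}
    for i, (t, o) in enumerate(combos * 20)
]

uat_set = stratified_sample(history, ["typology", "outcome"], per_stratum=10)
print(len(uat_set), "alerts selected for UAT")
```

Fixing the random seed is a deliberate choice here: it lets you show an auditor exactly how the UAT dataset was drawn.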

3. Design Test Scenarios and a Scenario Matrix:

Beyond just feeding historical alerts, design specific test scenarios that the AI should handle. Think of scenario design as creating a matrix of test cases to exercise various aspects of the AI:

- Include scenarios for each major typology your AML program covers. For example, a structuring/money laundering scenario (smurfing of deposits), a human trafficking ring pattern, an OFAC/sanctions screening alert, an elder financial abuse case, etc. You want to see how the AI’s logic deals with each kind of risk.

- Include edge cases and challenging situations. For instance, an alert with very limited data, or one with conflicting indicators, or a very high-risk profile that should always escalate. If the AI has a machine learning component, include a scenario with data outside its usual training distribution to see how it copes.

- Include normal/benign scenarios too. A great UAT tests not only whether the AI catches bad actors, but also whether it avoids false positives. For example, test a scenario of a business-as-usual high-volume customer who triggers an alert (which historically was deemed a false positive); does the AI appropriately clear it?

- If the AI provides narrative justifications or SAR write-ups, include a scenario to test the quality of its written explanation (e.g. a complex case where a good narrative is non-trivial).

Document these scenarios in a test scenario matrix, which is essentially a structured list of test cases with their inputs and expected outcomes. A UAT document often serves as a “definitive validation framework” covering a scenario matrix mapped to requirements. For each scenario/test case, write down the expected behavior of the AI (e.g. “should recommend escalation”, “should clear the alert”, “should produce a rationale mentioning X, Y, Z”). This gives you a basis to later judge pass or fail for each scenario.
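A scenario matrix of this kind can be kept as structured data, which makes coverage gaps easy to spot. The following is a minimal sketch; the `Scenario` fields, the scenario IDs, and the rule that every typology needs both a "typical" and a "benign" case are hypothetical choices for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Scenario:
    scenario_id: str
    typology: str
    kind: str                 # "typical", "edge", or "benign"
    expected_decision: str    # "escalate" or "clear"
    rationale_must_mention: tuple = ()

MATRIX = [
    Scenario("S-001", "structuring", "typical", "escalate", ("cash deposits",)),
    Scenario("S-002", "structuring", "benign", "clear"),
    Scenario("S-003", "sanctions", "typical", "escalate", ("name match",)),
    Scenario("S-004", "elder_abuse", "edge", "escalate"),
]

def uncovered(matrix, required_typologies, required_kinds=("typical", "benign")):
    """Return (typology, kind) pairs that have no scenario in the matrix yet."""
    covered = {(s.typology, s.kind) for s in matrix}
    return sorted(
        (t, k)
        for t in required_typologies
        for k in required_kinds
        if (t, k) not in covered
    )

gaps = uncovered(MATRIX, ["structuring", "sanctions", "elder_abuse"])
for typology, kind in gaps:
    print(f"Missing a {kind} scenario for {typology}")
```

Running a check like this before UAT starts shows which typologies still lack a benign or typical test case.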

4. Adjudication Standards (Ground Truth):

For each test alert or scenario, you need a ground truth or expected result to compare against. This typically comes from your seasoned investigators or from historical decisions. Essentially, you must decide upfront what the “correct” action or outcome is for each test case. During UAT, the AI’s output will be adjudicated against this expected outcome:

- If the AI’s decision matches the expected decision, and the rationale is acceptable, that test case is a pass.

- If it doesn’t, that’s a fail (with degrees, e.g. a minor discrepancy vs. a critical miss).

It’s crucial to set clear criteria for what counts as a pass. For example, you might say an alert is considered correctly handled if the AI’s recommendation (clear or escalate) matches the human expectation and any explanatory notes meet your quality standard (e.g. factual, complete). Define who will do this adjudication, usually your compliance analysts or QA team will review each AI decision during UAT and mark it as correct or not. Ensure they apply consistent standards (perhaps have two people review or use a rubric for complex cases).
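The adjudication rule can be written down as a small function so that every reviewer applies it the same way. This is a sketch under stated assumptions: the three failure tiers (`fail_minor`, `fail_major`, `fail_critical`) and the two-reviewer tiebreak are illustrative, not a regulatory standard.

```python
def adjudicate(expected, ai_decision, rationale_ok):
    """Return a verdict for one test case.

    Assumed tiers (hypothetical):
      - fail_critical: AI cleared an alert that should have been escalated
      - fail_major:    AI escalated an alert that should have been cleared
      - fail_minor:    decision was right but the rationale fell short
    """
    if ai_decision == expected:
        return "pass" if rationale_ok else "fail_minor"
    if expected == "escalate":
        return "fail_critical"
    return "fail_major"

def needs_tiebreak(verdict_a, verdict_b):
    """With two independent reviewers, any disagreement goes to a third person."""
    return verdict_a != verdict_b

reviewer_a = adjudicate("escalate", "escalate", rationale_ok=True)
reviewer_b = adjudicate("escalate", "escalate", rationale_ok=False)
print(reviewer_a, reviewer_b, "tiebreak needed:", needs_tiebreak(reviewer_a, reviewer_b))
```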

5. Pass/Fail Criteria:

UAT isn’t complete until you decide if the system overall “passed” or “failed”. This means translating the individual test case results into an overall judgment. Before you even begin testing, set quantitative success criteria if possible. For instance, you might require that 95% of test alerts were handled correctly (or 100% of critical high-risk scenarios passed), or that false positives were reduced by X% without missing any true positives, etc. Also include qualitative criteria. User acceptance, for example, is an important factor: do the analysts find the AI’s output usable and trustworthy? Additionally, you may set performance criteria (the AI must process alerts within a certain time) or integration criteria (it must log each decision in the case management system). All these become part of the UAT exit criteria. By defining the bar for success up front, you can confidently say “UAT passed, we can proceed” or “UAT failed to meet our bar” when the time comes. It’s better to have a firm yardstick than to endlessly debate results later. As part of go-live readiness, stakeholders should mutually agree on the success criteria and sign off that those have been met.
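Quantitative exit criteria like these are simple enough to compute mechanically from the test log. The sketch below assumes a hypothetical result record with `verdict` and `critical` fields and uses the 95% overall threshold and 100% critical-scenario rule mentioned above as example values.

```python
def evaluate_exit_criteria(results, min_pass_rate=0.95):
    """Summarize per-case verdicts into an overall UAT pass/fail decision."""
    total = len(results)
    passed = sum(r["verdict"] == "pass" for r in results)
    pass_rate = passed / total if total else 0.0
    # Every scenario flagged as critical must pass, regardless of the overall rate.
    critical_failures = [
        r["id"] for r in results if r["critical"] and r["verdict"] != "pass"
    ]
    return {
        "pass_rate": pass_rate,
        "critical_failures": critical_failures,
        "uat_passed": pass_rate >= min_pass_rate and not critical_failures,
    }

# Hypothetical run: 100 cases, 3 non-critical failures.
run_1 = [{"id": n, "verdict": "pass", "critical": n < 10} for n in range(100)]
for n in (40, 41, 42):
    run_1[n]["verdict"] = "fail"
summary_1 = evaluate_exit_criteria(run_1)

# Same run, but one critical scenario also fails.
run_2 = [dict(r) for r in run_1]
run_2[5]["verdict"] = "fail"
summary_2 = evaluate_exit_criteria(run_2)

print(summary_1["uat_passed"], summary_2["uat_passed"], summary_2["critical_failures"])
```

Note that the second run still has a 96% pass rate; it fails only because a critical scenario failed, which is the point of treating the two criteria separately.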

6. Documentation:

Document everything from the start. A UAT test plan document should list the test scenarios, data to be used, roles and responsibilities, schedule, and the success criteria. During execution, keep a log of test results (pass/fail per scenario, any issues found, screenshots or evidence of outputs, etc.). This documentation becomes evidence for auditors/regulators that you did your due diligence. It also helps in internal discussions if someone asks “Did we test scenario X?” you have it recorded. In fact, the UAT document and results should be retained as part of your model governance library (it demonstrates compliance with model validation expectations under OCC 2011-12/SR 11-7). A comprehensive UAT package will include the test scenario matrix and results, any defect/issue list and how those were resolved, and formal sign-off from the responsible stakeholders that the system is ready for production.

By painstakingly planning your UAT in this manner, you set the stage for a thorough evaluation. You essentially create a microcosm of your production environment and challenges, where the AI must prove itself on paper before it’s trusted with live decisions.

Execution: Running UAT Successfully (and Knowing When to Fail Fast)

Planning is only half the battle; now you must execute the UAT. Here’s how to run the UAT phase in a controlled and effective way:

1. Setup and Preparation:

Before testing begins, ensure the UAT environment is ready. This means the AI system is deployed in a test environment or sandbox that mimics the production (with all necessary integrations to data sources, case management systems, etc. in place). Often, the IT team or the vendor’s engineers will set up a dedicated UAT instance. Load the test dataset into this system. Also, very importantly, make sure the users (analysts/testers) are trained on how to use the AI tool. UAT is not just testing the AI, it’s testing the end-to-end process including the humans in the loop. A quick training or orientation for the people who will execute UAT on how to input cases, interpret the AI outputs, and record outcomes is essential so that UAT isn’t derailed by user confusion. This preparation phase could include a kickoff meeting where you walk through the UAT plan with all participants.

2. Who’s Involved: UA

T is inherently a cross-functional exercise. Key players typically include:Having all these stakeholders engaged ensures that UAT results are viewed from all angles (user experience, regulatory compliance, technical fit, etc.). It also means that if something’s not right, the people who can fix it are “in the room” to act quickly.

- Compliance/AML Analysts and BSA Officer: They are the end users whose acceptance matters most. Experienced analysts will execute the test cases, review the AI’s decisions, and provide feedback on whether those decisions were correct and whether they trust the outcomes. The BSA Officer or compliance manager oversees this to ensure it meets regulatory and policy standards.

- Product or Business Owner: If a product manager or business leader is championing the AI project, they should observe results and ensure the solution aligns with business goals (e.g. efficiency gains).

- Risk/Model Validation Team: In many FIs, a model risk or validation team (could be part of risk management or internal audit) will want to review the testing process. They might not execute tests, but they may set requirements for testing (to satisfy model governance) and will review the evidence. It’s wise to involve them early so the UAT covers what they need (e.g. specific challenger tests or additional validation checks).

- IT/Integration Team: They ensure the technical side is smooth, that data flows correctly, any technical issues encountered during testing are resolved (e.g. if an API connection fails), and that the environment is stable.

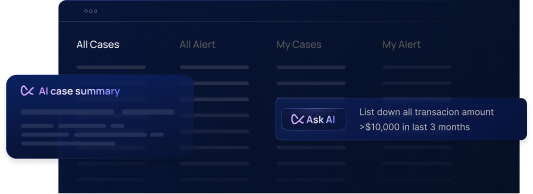

- Vendor Support (Customer Success and Engineers): If this is a third-party AI solution, the vendor will usually provide support during UAT. For example, Flagright assigns a dedicated customer success manager and a solutions engineer during UAT to help coordinate and quickly resolve issues. The vendor’s team may help interpret unexpected AI behavior, apply minor configuration tweaks, or even retrain a model if something is significantly off, all to ensure a successful outcome. They’ll also be taking notes on any feature requests or enhancements the client suggests during UAT.

- Procurement/Project Manager (if applicable): If this UAT is part of a formal vendor evaluation or proof-of-concept, someone from procurement or project management might oversee that the test is executed within agreed timeline and that success criteria align with the contract/SLA promises.

3. Executing the Tests:

Now the real action, executing the scenario matrix. This should be done systematically. One common approach: have testers go through test cases one by one, inputting the alert or case into the AI system (or if it’s batch processing, run a batch of alerts through). For each, observe the AI’s output (what decision did it make? what score or rationale did it give?). The tester then compares it to the expected outcome:

- If it matches expectation, mark that scenario pass.

- If not, mark fail and document what was wrong. For example: “Alert #45 (expected escalate); AI cleared it incorrectly.” Or “The AI escalated as expected, but the explanation missed key risk factors we’d require.”

It’s good practice to log these results in real-time (e.g. in a spreadsheet or test management tool). Also log any issues/bugs encountered (e.g. “system threw an error on alert #10” or “the workflow screen is confusing for the user”). During UAT, maintain an issue tracker where each issue is categorized by severity. Some issues might be quick fixes (a label in the UI), others might be more serious (the model consistently misclassifies a certain typology).

Real-time issue resolution is ideal. If the vendor or IT can fix something on the fly (say a configuration setting or a minor code tweak) and it’s not disruptive, do it and re-run that test. For instance, if you find that a threshold or rule can be adjusted to catch a missed scenario, the vendor might adjust it and you retest the scenario within UAT. This iterative approach prevents delays. Flagright, for example, emphasizes systematic scenario testing with real-time issue resolution, followed by a resolution phase to re-test any fixes.
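Logging each result as it happens can be as simple as appending rows to a CSV file. The sketch below writes to an in-memory buffer so it runs as-is; the column names and severity labels are hypothetical, and in practice you would write to a shared file or a test management tool.

```python
import csv
import io
from datetime import datetime, timezone

FIELDS = ["logged_at", "scenario_id", "expected", "ai_decision", "verdict", "severity", "note"]

def log_result(writer, scenario_id, expected, ai_decision, verdict, severity="", note=""):
    """Append one test outcome; the tester supplies the verdict and any note."""
    writer.writerow({
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "scenario_id": scenario_id,
        "expected": expected,
        "ai_decision": ai_decision,
        "verdict": verdict,
        "severity": severity,
        "note": note,
    })

buffer = io.StringIO()  # stand-in for an open file
writer = csv.DictWriter(buffer, fieldnames=FIELDS)
writer.writeheader()

log_result(writer, "alert-12", "clear", "clear", "pass")
log_result(writer, "alert-45", "escalate", "clear", "fail",
           severity="critical", note="AI cleared it incorrectly")

# Read the log back, as a reviewer or auditor would.
rows = list(csv.DictReader(io.StringIO(buffer.getvalue())))
for row in rows:
    print(row["scenario_id"], row["verdict"], row["severity"], row["note"])
```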

4. Duration and Pace:

How long should UAT execution take? This depends on scope, but 1-3 weeks is common for an AML system UAT. In a fast-track deployment, it may be as short as one week of intensive testing if the integration is simple and the dataset is limited. In more complex cases, UAT can stretch to several weeks, especially if issues are found that need fixing and regression testing. For example, the overall timeline could be an 11-week implementation from contract to go-live, with about 2 weeks dedicated to UAT in the middle. Leading practices treat UAT as an essential gate: Flagright’s deployment methodology, for instance, has UAT in weeks 4-6, and go-live will not proceed until UAT is fully completed and passed. So, plan for a dedicated block of time. During this period, it’s wise to clear some of the day-to-day workload from your testing analysts if possible so they can focus on UAT without distraction.

While you don’t want to rush UAT, you also want to avoid it dragging on endlessly. This is where the “fail fast” mindset comes in. If you’re halfway through your test cases and it’s already evident that the AI is not meeting key criteria, surface that observation immediately to stakeholders. For example, if out of the first 50 test alerts the AI has made several critical mistakes with no obvious fix, call a checkpoint meeting. It might be that the team decides to pause and let the vendor refine the model before continuing, or even decides this solution might not be ready. Failing fast means recognizing a potential failure early, stopping or pivoting, rather than running the full test only to conclude failure at the end. It saves time and resources.
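A fail-fast rule works best when the trigger is agreed before testing starts. The sketch below shows one possible trigger; the thresholds (more than two critical misses, or a pass rate under 80% once at least 20 cases have run) are hypothetical and should be set by your own stakeholders.

```python
def should_call_checkpoint(verdicts, max_critical=2, min_cases=20, min_pass_rate=0.80):
    """Decide whether results so far justify pausing UAT for a stakeholder review."""
    critical = sum(v == "fail_critical" for v in verdicts)
    if critical > max_critical:
        return True  # too many critical misses, regardless of how many cases have run
    if len(verdicts) >= min_cases:
        pass_rate = sum(v == "pass" for v in verdicts) / len(verdicts)
        return pass_rate < min_pass_rate
    return False  # too early to judge on pass rate alone

# Hypothetical progress: first 50 alerts, three of them critical misses.
first_50 = ["pass"] * 47 + ["fail_critical"] * 3
print("Checkpoint needed:", should_call_checkpoint(first_50))
```

The function only recommends a meeting; whether to pause, refine the model, or stop remains a human decision.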

5. Communication and Monitoring:

Throughout UAT, keep communication channels open. Daily stand-up calls or UAT status meetings are very helpful. They allow the team to discuss what passed/failed that day, what needs attention, and to recalibrate the plan if needed (maybe add a test case if someone thought of a new edge scenario, or adjust the schedule if things are going slower/faster). Transparency is key: no one should hide failures or issues; bring them up so they can be addressed. The goal is not to “prove the AI is perfect”; the goal is to find the truth. If something’s wrong, everyone benefits from knowing it early.

6. Resolution and Re-Test:

Once the initial round of tests is done, you likely have a list of failed cases or issues. Now comes the resolution phase. Prioritize the issues:

- Which ones are critical must-fix-before-go-live? (e.g. “AI misses structuring cases; unacceptable, needs model retraining” or “Integration fails to log decisions; must fix”).

- Which are minor or can be worked around? (e.g. “UI typo” or “AI’s narrative could be slightly improved; nice to have.”).

Work with the vendor/IT on a plan to address the critical issues. This could mean tweaking some rules or thresholds, providing the AI additional training data on a typology it struggled with, fixing integration bugs, etc. Once fixes are in place, re-test those scenarios. UAT isn’t done until you’ve confirmed that all critical issues are resolved or mitigated. It’s common to do a short second cycle of UAT focusing on the previously failed cases after fixes. If new issues appear, handle those accordingly. This may add a bit more time, but it’s worth it to ensure confidence.
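The prioritization and re-test steps can be driven from the issue tracker itself. This is a minimal sketch with a hypothetical issue record (`severity`, `status`, `scenarios`) and a hypothetical three-state lifecycle of "open", "fixed" (awaiting re-test), and "verified" (re-test passed).

```python
ISSUES = [
    {"id": "I-1", "severity": "critical", "status": "fixed",
     "scenarios": ["S-001", "S-007"], "note": "AI misses structuring cases"},
    {"id": "I-2", "severity": "critical", "status": "open",
     "scenarios": ["S-020"], "note": "Integration fails to log decisions"},
    {"id": "I-3", "severity": "minor", "status": "open",
     "scenarios": [], "note": "UI typo"},
]

def plan_retest(issues):
    """Split issues into scenarios to re-run, go-live blockers, and deferred items."""
    # Scenarios tied to issues that have a fix in place but are not yet verified.
    retest = sorted({s for i in issues if i["status"] == "fixed" for s in i["scenarios"]})
    # A critical issue blocks go-live until its re-test has actually passed.
    blockers = [i["id"] for i in issues
                if i["severity"] == "critical" and i["status"] != "verified"]
    deferred = [i["id"] for i in issues
                if i["severity"] != "critical" and i["status"] != "verified"]
    return {"retest": retest, "blockers": blockers, "deferred": deferred}

plan = plan_retest(ISSUES)
print("Re-run:", plan["retest"])
print("Blocking go-live:", plan["blockers"])
print("Deferred:", plan["deferred"])
```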

7. User Acceptance & Sign-off:

Assuming the fixes work and the pass criteria are now met, it’s time to formalize the “acceptance” in User Acceptance Testing. Typically, the testing team will compile a UAT summary report listing all scenarios, results, any deviations and resolutions, and stating whether each success criterion was achieved. The relevant stakeholders (head of compliance/BSA Officer, head of IT or project sponsor, and often the model risk manager) will review and then sign off that they are satisfied. This sign-off is a major go/no-go checkpoint. If all is well, it’s a “go” for production. If not, either UAT is extended or it’s a “no-go”, which could mean going back to the vendor for improvements or even canceling the deployment if the issues are too fundamental. Remember, a no-go after UAT is not a failure on your part; it’s a success of the process. It means the process did its job by preventing a bad deployment. The concept of failing fast is that it’s better to have a project not proceed than to push it to production and have a failure in the live environment with real money and compliance risk at stake.

In summary, executing UAT is an exercise in thoroughness, collaboration, and honesty. It’s about making sure everyone is satisfied that “yes, this AI works for us” and, if not, having the courage to pause or pivot. By the end of UAT, the goal is to have zero surprises when you flip the switch in production.

UAT as a Compliance and Governance Requirement (Regulatory Expectations)

One might ask: beyond internal confidence, why all this rigor? The answer: regulatory expectations. In the world of AML, regulators expect FIs to implement technology responsibly and be able to demonstrate that any automated system is reliable, controlled, and well-understood by the institution. UAT is a key piece of meeting those expectations, fitting into the broader model governance framework.

Model Risk Management (MRM) Guidelines: The OCC Bulletin 2011-12 and Federal Reserve SR 11-7 guidance on model risk management make it clear that FIs must manage the risk of models through robust validation and oversight. They define a model broadly (any algorithm or system that uses data to produce an estimate or decision). Your AI alert-handling agent certainly qualifies as a model. These guidelines insist on:

- Testing and Validation: Before deployment, the model must be tested to ensure it’s working as intended. This includes out-of-sample testing, stress testing, and benchmarking as appropriate. For an AML AI, UAT essentially acts as an initial validation test, using data representative of the production environment to confirm performance. Regulators don’t want you to just trust the vendor’s promises or a one-time demo; they want to see evidence that you tested it yourself under your conditions.

- Independent Review: MRM guidelines call for an independent review of models. This often means your internal model validation or audit team should review the UAT results. They might even repeat certain tests or analyze the output themselves. This independent challenge function makes sure that the model isn’t a black box running wild. So, expect that your UAT documentation and results will be scrutinized by internal audit and potentially examiners. If you can show a methodical UAT with solid results, it goes a long way in an examination toward answering the question: “How do you know this AI is working correctly and not posing undue risk? Show us.”

- Vendor Models and the FI’s Responsibility: Critically, the guidance states that using a third-party model does not absolve the FI of responsibility. A direct quote: “Banks are expected to validate their own use of vendor products.” Furthermore, vendors “should provide appropriate testing results that show their product works as expected”, but the FI must verify and document that, and also document any customization or configuration done. In practice, this means you should obtain from the vendor any developmental evidence of model performance, but then replicate or test key aspects yourself during UAT. If the vendor’s AI comes with certain claims (e.g. “90% accuracy at alert triage”), your UAT should measure whether those hold true with your data. Regulators will ask for that kind of verification. And if the model was adjusted or tuned for your FI (which it likely is, via risk scoring thresholds or training on your historical alerts), document those adjustments and justify them as part of the UAT report.

- Documentation and Traceability: The phrase “traceability” in regulatory parlance often refers to being able to trace model decisions and data lineage. During UAT, you should evaluate whether the AI system provides audit logs and explanations for its decisions. Does it log, for each alert, who/what decided to clear or escalate it and why? Can you extract a report of all AI decisions and their justifications? Good AI vendors include features like versioned decision logs for reproducibility and plain-language explanations of decisions. These are gold from a regulatory perspective. UAT should confirm that these logging and explainability features work as advertised. For example, you might randomly pick a handful of UAT alerts and pretend you’re an examiner: check that you can pull up a log of the AI’s reasoning and see if it’s intelligible and matches the outcome. If it’s not, that needs addressing. Regulators have said they care less about the fact that AI is used, and more about “explainability, control, and documentation”. So your UAT should exercise the explainability: ensure the AI can produce an audit-trail-friendly explanation. Think of traceability as proving the chain of trust: data came in, AI did X, here’s why, and here’s how a human oversaw it.

- Human-in-the-Loop (HITL) Controls: Current regulatory expectations for AI in compliance strongly favor a “human in the loop” approach, at least initially. This means either the AI is in an advisory role (the analyst makes the final decision) or, if the AI does make final decisions on some alerts, there is a robust oversight process (e.g. periodic human review of a sample of AI-closed alerts, and the ability for humans to override decisions). UAT should thus validate the HITL design. For instance, if your plan is to auto-clear low-risk alerts with AI and only send high-risk ones to humans, you should test that workflow in UAT. Does the AI correctly identify which are low-risk enough to auto-clear? How does it flag them? Can analysts easily review AI-cleared alerts after the fact? In UAT you might simulate the embedded quality control process, e.g. have a QA analyst review a random subset of AI decisions to ensure they were correct, mirroring what you’d do in production. This ensures the mechanisms for human oversight actually work. An AI agent should operate with the same or better oversight than a human analyst. UAT is the time to confirm that: for example, test whether an analyst’s override of an AI decision is captured, and whether the AI learns from it or at least records it. Many vendors tout continuous learning or feedback loops; UAT can test a simple version of that (tell the AI it was wrong on a case and see what happens; at minimum it should record the feedback). Proper HITL and feedback loop processes give regulators reassurance that the system isn’t unchecked. Your UAT report can then proudly state that, e.g., “We tested the override function and quality control review process; in all cases the system allowed human intervention and logged the changes for audit.”
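Two of the oversight checks described in the list above, QA sampling of AI-cleared alerts and confirming that overrides are logged, can be rehearsed during UAT with very little tooling. The sketch below is illustrative: the record fields, the 10% sampling rate, and the audit-log event shape are assumptions, not any vendor’s actual schema.

```python
import random

def qa_sample(decisions, rate=0.10, seed=42):
    """Draw a reproducible random sample of AI-cleared alerts for human QA review."""
    cleared = [d for d in decisions if d["ai_decision"] == "clear"]
    if not cleared:
        return []
    k = max(1, round(len(cleared) * rate))
    return random.Random(seed).sample(cleared, k)

def override_is_logged(audit_log, alert_id):
    """Check that an override entry exists and names both the analyst and a reason."""
    return any(
        e["alert_id"] == alert_id
        and e["event"] == "override"
        and bool(e.get("analyst"))
        and bool(e.get("reason"))
        for e in audit_log
    )

# Hypothetical UAT data: 60 AI decisions, half of them cleared.
decisions = [
    {"alert_id": f"A-{n}", "ai_decision": "clear" if n % 2 == 0 else "escalate"}
    for n in range(60)
]
audit_log = [
    {"alert_id": "A-4", "event": "override", "analyst": "analyst_1",
     "reason": "Counterparty is on internal watchlist"},
]

sample = qa_sample(decisions)
print("QA review queue:", [d["alert_id"] for d in sample])
print("Override on A-4 logged:", override_is_logged(audit_log, "A-4"))
```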

In essence, think of UAT as the first big exam for your AI, and regulators are peeking over your shoulder. By aligning your UAT with these governance expectations, you not only satisfy your internal standards but also build the evidence portfolio you’ll show to auditors and examiners. A successful UAT, documented well, becomes a pillar of your model governance. It demonstrates that you have controlled introduction of AI into your compliance program, with due caution and verification. This can be hugely beneficial during regulatory exams. Rather than being on the defensive (“uh, we deployed an AI, hope it’s okay…”), you can be on the front foot, providing a binder or folder with your UAT plan and results, showing how you validated the tool, tuned it, and set up oversight. That level of preparedness can turn a potentially tricky conversation (“Are you sure this AI isn’t missing things?”) into a straightforward demonstration of compliance.

Common UAT Questions: What to Test, How Long, Who to Involve, and What “Good” Looks Like

Let’s address some of the common questions and pain points stakeholders often have about UAT in this context:

1. What exactly should we test in an AML AI UAT?

Test enough to cover the waterfront of your risks and requirements. At minimum, test all major alert types and typologies you encounter: if you have transaction monitoring scenarios for structuring, layering, fraud, etc., include each. Test boundary cases (alerts that are just above/below risk thresholds). Test integration points: does an alert move properly from your monitoring system to the AI to the case management tool? Test user workflows: can an analyst effectively review and accept/override the AI recommendation in the interface provided? Test the AI’s output quality: not just the decisions, but also any narratives or risk score explanations it produces. And importantly, test the operational processes around the AI: for example, if you expect analysts to only handle what the AI escalates, simulate a day’s worth of alerts to see how workload is distributed and that nothing falls through the cracks. Essentially, test the AI in context, not in isolation. A useful approach is to maintain a traceability matrix mapping your original requirements to test cases. For example, if one requirement was “The AI must handle retail and commercial banking alerts,” ensure you have test cases for both retail and commercial scenarios; if another requirement was “The system must produce an audit log,” have a test case where you retrieve the audit log and check it.
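A requirements traceability matrix like the one described can be built directly from the test case list. The sketch below uses the two example requirements from the text plus one hypothetical third requirement; the IDs and record fields are illustrative.

```python
REQUIREMENTS = {
    "REQ-1": "The AI must handle retail and commercial banking alerts",
    "REQ-2": "The system must produce an audit log",
    "REQ-3": "Analysts can override the AI recommendation",  # hypothetical addition
}

TEST_CASES = [
    {"id": "TC-01", "covers": ["REQ-1"], "title": "Retail structuring alert"},
    {"id": "TC-02", "covers": ["REQ-1"], "title": "Commercial wire alert"},
    {"id": "TC-03", "covers": ["REQ-2"], "title": "Retrieve audit log and check it"},
]

def build_traceability(requirements, test_cases):
    """Map each requirement ID to the test cases that exercise it."""
    matrix = {req_id: [] for req_id in requirements}
    for tc in test_cases:
        for req_id in tc["covers"]:
            if req_id not in matrix:
                raise KeyError(f"{tc['id']} references unknown requirement {req_id}")
            matrix[req_id].append(tc["id"])
    return matrix

matrix = build_traceability(REQUIREMENTS, TEST_CASES)
untested = [req_id for req_id, cases in matrix.items() if not cases]

for req_id, cases in matrix.items():
    print(req_id, "->", ", ".join(cases) or "NO TEST CASE")
```

Any requirement that appears in `untested` is a gap to close before UAT starts, or to document as explicitly out of scope.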

2. How long does UAT typically take (and when should it happen)?

UAT duration can vary, but typically a couple of weeks to a month is normal for an AML system evaluation. The timeline often depends on project urgency and complexity. If the integration is cloud-based and relatively straightforward, you might execute UAT in 2 weeks. If on-premise or with lots of data prep, it might take 4-6 weeks including iterations. It’s helpful to schedule UAT after initial configuration and internal testing but before full production go-live. For example, in an 11-week deployment project plan, UAT might occupy weeks 5-6, with go-live in week 7 if UAT is successful. Some firms allow more time, e.g. a pilot phase that could run a couple of months, but a formal UAT as the final checkpoint is shorter and more concentrated. In any case, plan your UAT window and communicate it to all stakeholders so they allocate time. Don’t try to squeeze UAT into just a day or two; that rarely yields confidence. And if you’re worried about UAT extending the project timeline, consider the alternative: a rocky go-live and remediation under regulatory pressure will cost far more time. UAT is an investment in quality.

3. Who needs to be involved in UAT (and who “owns” it)?

As described earlier, UAT is a team sport. The Compliance/AML team typically “owns” it in terms of defining success (since it’s their process being validated) and doing the bulk of testing. The vendor or tech team owns providing a working system and supporting fixes. Model risk/audit owns making sure it meets validation standards. IT owns the environment and data. It’s important to have a single point of contact or UAT lead, often a project manager or the compliance project lead, who coordinates all these players. And it’s essential that someone at the executive level (like the BSA Officer or head of compliance) is sponsoring this and is ready to endorse or veto go-live based on the UAT outcome. Their involvement ensures that UAT isn’t just a formality but a true acceptance test with teeth. On the flip side, involving front-line analysts gives you practical insight: if the people who will use the AI daily don’t like it or find it confusing during UAT, that’s a red flag to address (better now than later).

4. What does “good” look like in a UAT?

“Good” UAT is not just one that passes; it’s one that is run thoroughly and gives stakeholders confidence. Here are some signs of a high-quality UAT:

- Comprehensive Coverage: All key scenarios and requirements were tested and passed. There are no major blind spots untested. If an alert type or product was out of scope, that’s acknowledged and a plan exists to handle it (maybe that typology will still be handled by humans or will be added in phase 2).

- High Pass Rate, No Critical Defects: Ideally, the vast majority of test cases passed. A few minor issues might remain (and are documented with owners assigned to fix in future), but anything critical was resolved. For example, if initially the AI struggled with a certain pattern, by end of UAT that was tuned and now works. You might have a metric like “98% of test alerts were handled correctly after fixes” to tout. The few that didn’t could be known acceptable limitations (with compensating controls) or corner cases to improve later.

- User Confidence and Competence: The analysts and investigators who participated are now relatively comfortable with the system. They know how to use it, and they trust its outputs based on what they saw. If you survey them at end of UAT, they’d say things like “the AI mostly made the right calls, and its explanations made sense”; that’s a great sign. On the other hand, if they say “we’re still not sure why it did what it did half the time,” that signals more work needed on explainability or training.

- Documentation & Audit Trail Complete: All results and changes are documented. Importantly, a model documentation package might be updated with what was learned in UAT. For instance, if threshold X was adjusted during UAT, that should be noted in the final model documentation. You might even produce a brief UAT report to file away. If an auditor asked “show me evidence you validated this AI tool,” you have that ready. This includes saving the scenario matrix, test results, sign-off sheet, etc., in a repository.

- Go/No-Go Decision Made with Confidence: At the end, there’s a meeting where results are reviewed, and stakeholders confidently make a go-live decision. A “good” UAT ends with either “Yes, we’re ready to launch and here’s why we believe it will work”, backed by data, or “No, we’re not going live because it didn’t meet the bar, and here’s the evidence why that’s the right call.” In both cases, the key is confidence and clarity. There’s no vague uncertainty. If it’s a go, everyone knows what to watch post-go-live (maybe certain metrics) but they’re optimistic. If it’s a no-go, it’s recognized as the right call (and maybe the plan shifts to extended pilot or trying a different solution).

- Alignment with Regulatory Standards: A subtle but important marker is whether your internal risk or audit teams are satisfied. If they give a nod that the UAT was conducted in line with model validation expectations, that’s a strong sign of quality. It means fewer problems later when examiners review it. For example, if UAT included testing with new data the model hadn’t seen (out-of-sample), testing extreme values (stress test), and comparing results to human decisions (benchmarking), you’ve basically hit all the validation notes that regulators love.

In short, “good” UAT results in a clear understanding of the AI’s performance, limitations, and readiness. It should feel like you have illumination, not darkness: you know what the AI will do well, where it might falter, and you have plans for how to manage it going forward.
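To make the pass-rate and critical-defect criteria above concrete, here is a minimal Python sketch of a go/no-go check over UAT results. The `TestResult` fields, the 98% threshold (borrowed from the example metric above), and the rule that any unresolved critical failure blocks go-live are illustrative assumptions, not a prescribed standard; your real acceptance criteria should come from your own UAT plan.

```python
from dataclasses import dataclass


@dataclass
class TestResult:
    case_id: str
    scenario: str
    passed: bool
    critical: bool = False  # assumption: marks cases whose failure is a critical defect


def go_no_go(results, min_pass_rate=0.98):
    """Return (decision, pass_rate, open_critical_case_ids)."""
    if not results:
        raise ValueError("no UAT results to evaluate")
    pass_rate = sum(r.passed for r in results) / len(results)
    # Any failed critical case blocks go-live regardless of the overall pass rate.
    open_critical = [r.case_id for r in results if r.critical and not r.passed]
    decision = "GO" if pass_rate >= min_pass_rate and not open_critical else "NO-GO"
    return decision, pass_rate, open_critical


# Hypothetical run: 49 passing cases plus one failed critical case.
results = [TestResult(f"T{i}", "structuring", True) for i in range(49)]
results.append(TestResult("T49", "sanctions", False, critical=True))
decision, pass_rate, open_critical = go_no_go(results)
print(decision, pass_rate, open_critical)
```

In this hypothetical run the pass rate meets the 98% bar, yet the decision is still a no-go because one critical case failed. That mirrors the point above: a high pass rate only counts once nothing critical remains open.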

From UAT to Production: Ensuring Lasting Success (90-Day Plans and Continuous Improvement)

Let’s assume your UAT went well and you’re moving to production. Congratulations! But our discussion wouldn’t be complete without touching on what happens next, because the story doesn’t end at go-live. In fact, regulators and best practices view model deployment as the beginning of another phase of monitoring and tuning. Here are some final thoughts on post-UAT success:

- Mandatory UAT Gating: We’ve stressed it already, but it’s worth reiterating as a principle: no UAT, no go-live. This should be enshrined in your project governance. Many failures in fintech/AI deployments happen when deadlines or pressure lead to skipping or shortening UAT. Don’t give in to that. It’s far more costly to recover from a flawed deployment. Top-tier implementation strategies explicitly make UAT completion a hard gate before production. Flagright, for example, follows a zero-compromise approach to go-live readiness where comprehensive UAT completion is mandatory before production deployment. Adopting such a stance internally gives your team the air cover to do things right. If someone tries to push you to go live without adequate testing, you have policy on your side to say “no, that’s against our process.”

- Go-Live and 90-Day Success Plan: Once in production, plan an early-life support or success period. In the first 90 days, closely monitor the AI’s performance. Many organizations do a “90-day success plan” which involves intensive monitoring, frequent check-ins, and metric tracking to ensure the solution is delivering value and not drifting. You should track metrics such as: alert volumes processed by AI vs human, the AI’s decision accuracy (maybe by doing continued quality sampling of AI decisions), how much faster cases are resolved, and any reduction in backlog or false positives. Also watch for unexpected side effects: are analysts perhaps over-relying on the AI without critical thought? Are there new patterns of alerts where the AI is unsure? Use this period to iron out any kinks. Flagright’s approach, for instance, includes daily monitoring post-launch transitioning to weekly check-ins over the first 90 days. The idea is to catch any issues early (again, “fail fast” on a micro scale: if something in production is not working, address it in days, not months).

- Audit Preparedness: All the work you did in UAT feeds into audit readiness. Make sure the final artifacts (UAT report, model documentation, policy for human oversight, etc.) are stored somewhere accessible. Some recommend creating an “Exam binder” or a document repository for the AI model that includes the validation/UAT results, documentation of model assumptions, limitations, and evidence of ongoing monitoring. That way, when audit or regulators come knocking, you aren’t scrambling, you’re presenting. It’s part of moving from being reactive to proactive in compliance management.

- Continuous Model Governance: Even beyond 90 days, treat the AI model like a living thing that needs periodic health checks. Regulators expect ongoing monitoring and annual (or periodic) validations for models. So, consider UAT as the initial validation and be prepared to do follow-up validations, perhaps lighter touch, after 6 months or a year, especially if the model learns or changes. Also, if you plan to update the AI model (maybe a new version from the vendor or re-training on new data), that update should go through a mini-UAT or validation test as well before being put in production. This cycle ensures you maintain control and knowledge of the model’s performance throughout its life.

- Failing Fast Redux: Even in production, the fail-fast mentality is valuable. If at any point the AI’s performance degrades or an unforeseen issue emerges (for example, suddenly it starts misclassifying a new typology), be ready to take swift action. That could mean rolling back to manual processing for that case type or engaging the vendor to retrain immediately. It’s better to temporarily suspend or scale back the AI’s responsibilities than to stubbornly push on and have a major miss. But because you did a great UAT, such surprises should be rare. Still, vigilance is the name of the game in model risk management.
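The quality sampling and swift-rollback ideas above can be sketched as a small check that compares sampled AI dispositions against reviewer dispositions per typology and flags any typology whose agreement falls below a floor. The record shape, the 0.95 floor, and the minimum sample size of 20 are assumptions for illustration only; they are not thresholds taken from any regulation or vendor.

```python
from collections import defaultdict


def typologies_to_review(samples, floor=0.95, min_samples=20):
    """Flag typologies where AI/reviewer agreement is below `floor`.

    samples: iterable of (typology, ai_decision, reviewer_decision) tuples
    drawn from ongoing quality sampling (hypothetical record shape).
    Returns a dict mapping each flagged typology to its agreement rate.
    """
    totals = defaultdict(int)
    agreed = defaultdict(int)
    for typology, ai_decision, reviewer_decision in samples:
        totals[typology] += 1
        if ai_decision == reviewer_decision:
            agreed[typology] += 1

    flagged = {}
    for typology, n in totals.items():
        if n < min_samples:
            continue  # too few samples to judge; keep sampling rather than decide
        rate = agreed[typology] / n
        if rate < floor:
            flagged[typology] = rate
    return flagged


# Hypothetical sample: the AI agrees with reviewers on every structuring
# alert but on only 15 of 20 crypto alerts.
samples = (
    [("structuring", "close", "close")] * 20
    + [("crypto", "close", "escalate")] * 5
    + [("crypto", "close", "close")] * 15
)
flagged = typologies_to_review(samples)
print(flagged)
```

A flagged typology is a prompt for human judgment, not an automatic verdict: the team might route that case type back to manual processing while the cause is investigated, as described above.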

Conclusion

User Acceptance Testing in the context of AML AI solutions is where preparation meets reality. It is your dress rehearsal for the high-stakes play of fighting financial crime with advanced technology. A great UAT is characterized by thorough planning, realistic test scenarios, active involvement of all stakeholders, and clear-eyed criteria for success. It bridges the gap between a vendor’s promises and your institution’s unique needs, ensuring that when the AI is finally set loose on your alerts, it performs with no surprises.

We’ve seen how robust UAT practices not only mitigate operational and compliance risks but also align with regulatory model governance expectations. By documenting your UAT and results, involving human oversight, and insisting on traceability and explainability, you’re essentially ticking the boxes that frameworks like OCC 2011-12 and SR 11-7 have laid out for responsible model use. This will pay dividends when auditors or examiners come calling: you’ll be ready to show that you left no stone unturned before deploying the AI.

Perhaps most importantly, UAT is about learning and adapting fast. If the solution is going to fail, you want it to fail in UAT, fast and safe, not in production, where it’s costly and potentially damaging. A “fail” in UAT isn’t a disaster; it’s a success in preventing a bad outcome later. Conversely, a pass in UAT gives you confidence to move forward and innovate in your compliance operations.

So, what does great AML UAT look like? It looks like a well-orchestrated test marathon that ends either with a victory lap (a confident go-live) or a wise early exit, and either outcome is a win compared to blind risk. It looks like BSA Officers, compliance analysts, risk managers, and IT working in concert, checklist in hand, testing hypothesis after hypothesis, until they are satisfied. It looks like documentation binders filled with evidence, and dashboards showing metrics that went from baseline to better. It looks, ultimately, like a team fully prepared to take an AI partner into the trenches of financial crime compliance, with eyes open.

In short, effective UAT is where you earn the right to trust your AI systems. And if that trust isn’t fully earned, great UAT helps you gain the wisdom to say “not yet,” an equally valuable outcome. By approaching testing with this mindset and integrating reliable financial crime compliance solutions, organizations can ensure both speed and safety, maintaining security while leveraging AI to strengthen their defenses against financial threats.
