Fraud in payments is growing more sophisticated, forcing payment service providers (PSPs) to rethink their defenses. Today’s fraudsters are even leveraging AI tools to scale attacks and evade detection. In response, regulators and industry leaders are raising the bar for fraud prevention. A 2025 American Banker survey found that 62% of banks and credit unions were making fraud detection an active or near-term priority for intelligent automation. This reflects a clear trend: the era of purely static, rules-based fraud screening is giving way to AI-driven approaches. But rather than viewing artificial intelligence and rule-based logic as opposing strategies, leading experts suggest combining them may yield the best results. This article explores the limitations of traditional rules-based screening versus AI-powered detection, and why a hybrid approach, blending AI with risk-based rules, is emerging as the gold standard for payment processors' compliance programs.

The Limits of Rules-Based Screening

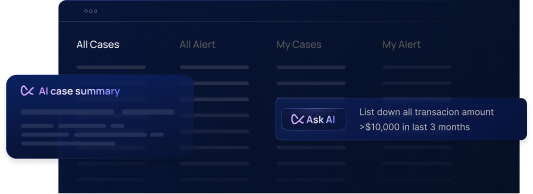

Rules-based fraud screening has been the backbone of transaction monitoring for decades. In a rules-based system, analysts define static if-then scenarios (e.g. “flag transactions over $10,000 from new accounts”) to catch suspicious activity. While this approach is straightforward and transparent, it has serious limitations in today’s fast-changing fraud landscape. Rigid rules can’t easily adapt to novel schemes or subtle fraud patterns, so they tend to cast wide nets that ensnare many legitimate customers. In practice, legacy AML and fraud systems trigger enormous volumes of false alerts: analysts estimate that 85-99% of alerts from older rule-based tools are false positives. One industry report noted that these legacy platforms rely on hard-coded thresholds that “don’t adapt to evolving criminal tactics,” such that roughly 90% of the transactions they flag are false alarms. This glut of bogus alerts forces compliance teams to waste countless hours investigating benign activity, creating alert fatigue and driving up costs.
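To make the if-then idea concrete, here is a minimal Python sketch of the example rule quoted above. The `Transaction` fields and the 30-day definition of a "new" account are assumptions made for illustration; they do not come from any particular vendor's rule engine.

```python
from dataclasses import dataclass


@dataclass
class Transaction:
    amount_usd: float
    account_age_days: int


# Hypothetical, hard-coded thresholds (assumptions for this sketch).
AMOUNT_THRESHOLD_USD = 10_000
NEW_ACCOUNT_MAX_AGE_DAYS = 30  # assumed meaning of "new account"


def flag_large_new_account(tx: Transaction) -> bool:
    """Static rule: flag transactions over $10,000 from new accounts."""
    return (
        tx.amount_usd > AMOUNT_THRESHOLD_USD
        and tx.account_age_days <= NEW_ACCOUNT_MAX_AGE_DAYS
    )


transactions = [
    Transaction(amount_usd=12_500, account_age_days=3),    # large, new account
    Transaction(amount_usd=9_999, account_age_days=3),     # just under the limit
    Transaction(amount_usd=12_500, account_age_days=400),  # large, old account
]
flags = [flag_large_new_account(tx) for tx in transactions]
print(flags)  # [True, False, False]
```

The second transaction shows the rigidity described above: a payment one dollar under the fixed threshold passes untouched, and the rule flags every large payment from a new account whether or not it is fraudulent.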

Rules-based logic also struggles to keep up with clever fraudsters. Fraud patterns are evolving too fast for static rules to catch up: in a recent Stripe survey of 4,000 payments professionals, three in four leaders said fraud is changing faster than their business can adjust. Every time criminals invent a new scam, analysts must manually create or tweak rules, a reactive process that often lags behind. Meanwhile, overly broad rules decline legitimate payments, and these false declines not only frustrate customers but also cost merchants and processors revenue. In short, a pure rules-based approach tends to produce high false positives, false negatives for new schemes, and slower response to emerging threats. Clearly, static rules alone are not sufficient to protect modern payment processors in a dynamic risk environment.

The Rise of AI in Fraud Detection

To overcome these gaps, financial institutions are increasingly turning to artificial intelligence and machine learning. AI-powered fraud detection systems can analyze vast datasets in real time and recognize complex patterns or anomalies that static rules might miss. Instead of relying on a predefined checklist of red flags, machine learning models continuously learn from new data, allowing them to identify emerging fraud tactics and adapt as criminals change strategies. The payoff from this adaptability is significant: banks report improved detection rates and fewer false positives when deploying AI models.
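As a rough illustration of a system that updates itself from new data rather than relying on a fixed checklist, the sketch below keeps a running per-customer baseline of transaction amounts and scores each new amount by how far it sits from that baseline. This is a simple statistical stand-in (Welford's online mean and variance), not a production machine learning model, and the class name and sample amounts are invented for the example.

```python
import math


class RunningBaseline:
    """Online mean/variance of observed amounts (Welford's algorithm)."""

    def __init__(self) -> None:
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        """Fold one new observation into the baseline."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def zscore(self, x: float) -> float:
        """Distance of x from the mean, in sample standard deviations."""
        if self.n < 2:
            return 0.0  # not enough history to judge
        std = math.sqrt(self.m2 / (self.n - 1))
        return 0.0 if std == 0 else (x - self.mean) / std


baseline = RunningBaseline()
for amount in [20.0, 25.0, 22.0, 30.0, 24.0]:  # made-up purchase history
    baseline.update(amount)

typical = baseline.zscore(26.0)   # close to past behavior
unusual = baseline.zscore(900.0)  # far outside past behavior
print(round(baseline.mean, 2), abs(typical) < 3, unusual > 3)
```

No one wrote a rule saying "flag purchases above $900 for this customer"; the cutoff emerges from the customer's own history and shifts as new transactions arrive. Real fraud models use many more signals than amount, but the adaptive principle is the same.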

For example, at DBS Bank, an AI-driven monitoring system now processes 1.8 million transactions per hour and delivered a 60% improvement in fraud detection accuracy coupled with a 90% reduction in false positive alerts. Such results were nearly impossible to achieve with manual rules alone, which often saw accuracy trade off against alert volume. Early adopters are also seeing major efficiency gains: by one estimate, AI-enabled investigations can be completed 75% faster than before.

Industry-wide data reflects how rapidly AI is being embraced for fraud prevention. In payments, 47% of businesses now use an AI-based tool or feature to detect and prevent fraud, making it the most common application of AI in the payments sector. Banks similarly recognize the value: a Thomson Reuters survey found 71% of financial services firms in 2025 were using AI for risk assessment and reporting. Experts are clear about why this shift is happening. “AI is often a key part of banks’ fraud prevention strategies,” observes Satish Lalchand of Deloitte, noting that banks are building sophisticated analytics to “detect unknown cases of known fraud schemes” and to find how criminals are “changing to evade detection”. In other words, AI’s ability to spot unknown patterns, not just repeat past ones, is critical as fraudsters get creative.

Crucially, better fraud detection with AI also translates to tangible business benefits. By catching more fraud (and doing so earlier), AI can prevent losses before they occur. And by cutting false positives, AI helps ensure genuine customers aren’t wrongly turned away. A case in point: online ticket marketplace TickPick integrated an AI-driven fraud screening tool (Riskified’s “Adaptive Checkout”) to augment its legacy checks. In just the first three months, TickPick was able to approve up to $3 million in additional legitimate orders that it would have otherwise declined as fraud. Those were real sales reclaimed by smarter detection, illustrating how AI can unlock revenue that rigid rules would have unintentionally suppressed. AI models, with their real-time learning, can also evaluate more data points (IP, device, behavior, etc.) per transaction than any single rule set, making them both faster and more nuanced in decisioning. It’s no surprise regulators are encouraged by these advancements; even the UK’s Financial Conduct Authority has noted AI’s potential to “improve fraud detection and AML controls (faster detection of illicit activity, reduced false positives, etc.)” in financial services.

Regulatory Pressure and Industry Expectations

Regulators and industry watchdogs are increasingly expecting PSPs and banks to leverage cutting-edge techniques like AI, not only to improve compliance outcomes, but also to keep pace with criminals. Organized fraud rings certainly aren’t standing still; the European Banking Authority warns that criminals are “increasingly using AI to automate laundering schemes, forge documents, and evade detection”. In this cat-and-mouse game, authorities recognize that financial institutions must innovate or else risk being outpaced by tech-savvy criminals. This is driving a new tone from regulators: one that encourages responsible AI adoption. The U.S. Financial Crimes Enforcement Network (FinCEN), for instance, explicitly stated that private-sector innovation, whether through new technologies or novel techniques, “can help financial institutions enhance their AML compliance programs, and contribute to more effective and efficient...reporting”. In other words, regulators see AI not as a buzzword, but as a means to better outcomes like catching more fraud and streamlining compliance.

At the same time, regulatory bodies are piling on pressure for results. Consider the UK’s Payment Systems Regulator, which in 2024 introduced a mandatory reimbursement scheme for authorized push payment fraud. It requires PSPs to reimburse victims of certain scams, effectively putting the financial onus on providers to prevent fraud in the first place. Facing such mandates, PSPs have a strong incentive to deploy the most effective fraud defenses available, namely, modern AI-driven systems that can dramatically reduce successful scams. More broadly, industry surveys reveal that executives view AI as essential to staying competitive. In American Banker’s 2025 survey, 62% of banks and credit unions said fraud detection was an active or near-term priority for intelligent automation. Fraud prevention has become a technological arms race, and those still relying on manual rules risk falling behind.

Regulators are also keen on a balanced approach: they emphasize that AI shouldn’t be a black box that overrides risk judgment, but rather a tool to augment a firm’s risk-based approach. Many agencies endorse a “human in the loop” philosophy for AI in compliance, ensuring that automated decisions can be explained and overseen by experts. This means that while regulators want to see firms innovate with AI, they also expect proper controls, transparency, and human judgment applied to these systems. In practice, that aligns perfectly with a hybrid AI + rules strategy. By combining AI with rule-based controls, PSPs can satisfy regulators that their programs are both cutting-edge and accountable to defined risk policies.

Combining AI with Risk-Based Rules: Best of Both Worlds

Rather than choosing AI versus rules, savvy payment processors are finding that the strongest fraud prevention strategy is AI plus rules. Each approach complements the other’s weaknesses. AI provides adaptability, pattern recognition, and predictive power, while rules provide clear parameters, domain expertise, and ease of interpretation. When deployed together, they create a layered defense far superior to either method alone.

In a combined model, machine-learning algorithms might monitor transactions to flag anomalous behaviors or score each transaction’s risk in real time. At the same time, a rules engine enforces business-specific policies and hard limits (for example, blocking prohibited jurisdictions or flagging transactions that violate known regulatory thresholds). The AI can catch the unknown unknowns (novel fraud patterns or subtle correlations that rules didn’t anticipate) and feed those insights to analysts. The rules, meanwhile, act as guardrails and embody the institution’s risk appetite (ensuring, say, that anything above a certain risk score gets routed for review, or that known red-flag conditions are never ignored). This synergy significantly cuts down false positives and false negatives. Indeed, Flagright’s experience building an AI-native compliance platform shows that marrying the two approaches yields powerful results: AI algorithms uncover complex suspicious patterns that evaded rules, improving risk detection and reducing false alerts, while the risk-based rules ensure the AI’s outputs align with compliance policies. The end result is faster, more accurate decision-making without sacrificing human oversight.
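One way to picture this layering is the Python sketch below. It assumes a model has already produced a `risk_score` between 0 and 1 for each transaction; the country code, thresholds, and function names are hypothetical placeholders, not Flagright's or any other vendor's actual API.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Decision(Enum):
    APPROVE = "approve"
    REVIEW = "review"
    BLOCK = "block"


@dataclass
class Transaction:
    amount_usd: float
    country: str
    risk_score: float  # assumed output of an ML model, in the range 0..1


# Hypothetical policy values chosen only for this sketch.
PROHIBITED_COUNTRIES = {"XX"}     # placeholder jurisdiction code
REPORTING_THRESHOLD_USD = 10_000  # assumed regulatory threshold
REVIEW_SCORE = 0.8                # assumed risk-appetite cutoff


def decide(tx: Transaction) -> Tuple[Decision, str]:
    """Hard rules act as guardrails; the model score catches the rest."""
    if tx.country in PROHIBITED_COUNTRIES:
        return Decision.BLOCK, "rule: prohibited jurisdiction"
    if tx.risk_score >= REVIEW_SCORE:
        return Decision.REVIEW, f"model: risk score {tx.risk_score:.2f}"
    if tx.amount_usd >= REPORTING_THRESHOLD_USD:
        return Decision.REVIEW, "rule: amount at or above threshold"
    return Decision.APPROVE, "no rule or model signal"


blocked = decide(Transaction(50.0, "XX", 0.05))
model_hit = decide(Transaction(120.0, "DE", 0.93))
rule_hit = decide(Transaction(15_000.0, "DE", 0.10))
clean = decide(Transaction(120.0, "DE", 0.10))
```

In this sketch the jurisdiction rule is checked first, so a low model score can never override a hard policy limit, while the `model_hit` case shows a small, otherwise unremarkable payment being routed to review on the model's signal alone. Every decision carries a reason string, which is what lets an analyst see which layer fired.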

Equally important, a hybrid approach addresses the need for explainability. Pure machine learning models can sometimes be opaque (“black boxes”), which is problematic for compliance officers who must explain why a transaction was flagged to regulators or customers. By incorporating rule-based logic, firms can maintain transparent criteria in the loop. For example, an AI model might assign a high risk score to a transaction, but a complementary rule can be set that, if the score exceeds X and the transaction triggers certain known risk factors, it’s flagged with a clear rationale. Many modern platforms facilitate this by allowing AI and rules to work hand-in-hand. Flagright’s platform, for instance, includes both a no-code rules engine and an AI forensics module. Compliance teams can easily configure custom rules and thresholds via a drag-and-drop interface, while behind the scenes Flagright’s AI agents analyze data to suggest optimal threshold values and detect hidden patterns. The machine learning might, for example, recommend loosening a particular rule’s threshold after observing it causes too many false positives, but crucially, the human compliance officer has final say, adjusting the rule as needed to balance sensitivity and precision. This keeps the “human in the loop” and ensures the institution’s risk experts remain in control of the system’s tuning.
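The threshold-suggestion workflow described here could look roughly like the following. This is a deliberately simple sketch under stated assumptions: the alert history, candidate thresholds, and function names are invented, and the search heuristic is a stand-in for whatever a real platform's AI does internally.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

History = List[Tuple[float, bool]]  # (amount, was_confirmed_fraud)


@dataclass(frozen=True)
class Rule:
    name: str
    threshold: float  # rule alerts when amount > threshold


def false_positive_rate(history: History, threshold: float) -> float:
    """Share of alerts at this threshold that were not fraud."""
    alerts = [is_fraud for amount, is_fraud in history if amount > threshold]
    if not alerts:
        return 0.0
    return sum(1 for is_fraud in alerts if not is_fraud) / len(alerts)


def suggest_threshold(history: History, rule: Rule,
                      candidates: List[float],
                      max_fp_rate: float) -> Optional[float]:
    """Smallest looser threshold that still flags all known fraud."""
    fraud_amounts = [amount for amount, is_fraud in history if is_fraud]
    for candidate in sorted(candidates):
        if candidate <= rule.threshold:
            continue  # only consider loosening the rule
        catches_all = all(amount > candidate for amount in fraud_amounts)
        if catches_all and false_positive_rate(history, candidate) <= max_fp_rate:
            return candidate
    return None


def apply_if_approved(rule: Rule, suggestion: Optional[float],
                      approved: bool) -> Rule:
    """The suggestion only takes effect if a human approves it."""
    if suggestion is None or not approved:
        return rule
    return Rule(rule.name, suggestion)


# Invented alert history for illustration.
history = [(1200, False), (1500, False), (1800, False),
           (2500, True), (3000, False), (4000, True)]
rule = Rule("large-transfer", threshold=1000)
suggestion = suggest_threshold(history, rule, [1500, 2000, 3000], max_fp_rate=0.4)
unchanged = apply_if_approved(rule, suggestion, approved=False)
updated = apply_if_approved(rule, suggestion, approved=True)
print(suggestion, unchanged.threshold, updated.threshold)
```

With this sample history, the current threshold of 1000 produces four false alerts out of six. Raising it to 2000 keeps both confirmed fraud cases flagged while cutting the false-positive share to one in three, whereas 3000 would miss a known fraud case and is rejected. The rule itself never changes unless `approved` is true, which mirrors the point that the compliance officer has the final say.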

By combining AI with risk-based rules, payment processors can achieve both adaptability and stability. The AI continually learns and flags new threats (so the system stays one step ahead of evolving fraud), while the rules provide a stable framework that can be explained to auditors and adjusted as business needs change. This hybrid model has clear payoffs: fewer false positives, quicker investigative workflows, and stronger fraud catch rates, all while maintaining compliance with regulatory expectations for rigor and explainability. It’s telling that in the market, solutions offering this balance are gaining traction. Flagright, as a case in point, delivers an AI-native transaction monitoring system with a high-performance rules engine in one package. Its AI Forensics deploys specialized ML agents to reduce analyst workloads and improve decision quality, and its no-code rules builder lets teams configure scenarios with “unparalleled agility” to respond to emerging risks. In practice, this means a compliance team can instantly tweak a rule or add a new scenario when a new fraud trend emerges, and simultaneously rely on the AI to catch anomalous signals that aren’t covered by any rule. Such flexibility and intelligence are becoming must-haves for PSPs operating in the digital economy.

Conclusion

The debate between AI and rules-based screening is quickly being settled in favor of a blended approach. Static rules alone are too brittle in the face of adaptive criminals, and AI alone without oversight can be too opaque or misaligned with policy. But together, they provide a formidable defense that keeps fraud losses low and false positives manageable. For payment processors, which often operate on thin margins and high volumes, this can be game-changing, enabling them to spot fraud faster, reduce friction for legitimate customers, reclaim revenue otherwise lost to false declines, and streamline compliance operations. It’s no coincidence that banks and fintechs worldwide are investing heavily in AI-driven fraud detection while simultaneously upgrading their rule engines for more flexibility. The message from both regulators and industry leaders is clear: leveraging AI’s brains and rules’ guardrails in concert is the way to stay ahead of financial crime. Payment processors that embrace this hybrid model will not only better protect themselves and their customers, but also meet the rising expectations of regulators for robust, risk-based fraud management. In the end, combining AI with risk-based logic isn’t just an innovation; it’s fast becoming a necessity for those who want to remain a step ahead of the fraudsters.
