Imagine asking your AI vendor why a customer was flagged, only to be told: “That’s just how our proprietary algorithms work.” This canned response signals a dangerous black-box approach where the decision-making logic is hidden from you. Such opacity is more common than you might think among compliance technology providers. Many legacy AML/fraud vendors tout “AI” but operate as closed systems, leaving compliance teams unable to explain or trust how alerts are generated. This lack of explainability is a direct compliance risk. The Financial Action Task Force (FATF) explicitly warns that AI “must not be a ‘black box’ that financial institutions cannot explain in reviews to supervisors or courts.” Opaque models make it difficult for regulated entities to show how decisions are made or why a customer was flagged. In other words, if your vendor’s AI is a mystery, your institution bears the burden when auditors or regulators start asking questions.

Regulators today expect financial institutions to understand and justify their AI-driven decisions. U.S. bank supervisors treat AI/ML models as subject to the same rigorous model risk management as any other model, requiring clear documentation, validation, and an ability to explain how outputs are produced. If a bank, for instance, can’t explain its transaction monitoring alerts because the vendor won’t reveal the logic, the bank, not the vendor, will be held accountable. Hence, outsourcing your intelligence doesn’t outsource your liability. If a vendor’s model behaves inappropriately or produces biased outcomes, the financial institution using it is still on the hook. You can’t blame a “secret algorithm” in front of regulators.

Compliance Risks of Outsourcing AI to Unaccountable Vendors

Relying on a black-box vendor for AML and fraud detection is like flying blind in a regulated storm. Compliance leaders must remember that regulators and laws don’t care whether your AI was built in-house or by a third party; you must evidence compliance either way. The problem with opaque, third-party AI is twofold: lack of transparency and lack of control. Without transparency, you may struggle to produce the audit trails and reasoning that regulators demand. For instance, the EU’s landmark AI Act (which entered into force in 2024) mandates that “high-risk” AI systems (a category that includes AML models) provide traceable, explainable decision logs and allow human interpretation of outputs. Similarly, global regulators from the FATF to the U.S. Treasury emphasize that AI used in compliance must have an audit trail and clear logic, not just spit out risk scores with no context. A vendor that refuses to divulge how its “secret sauce” works puts your institution in jeopardy of non-compliance with these expectations.

Lack of control is the other huge risk. By “building your AI strategy on someone else’s intelligence,” you effectively cede control over how risk is detected and managed in your organization. What happens when the vendor’s model starts under-performing? We’ve seen that AI systems can degrade over time; if data drifts or new criminal patterns emerge, a static black-box model might make worse decisions as months go by. If you’ve outsourced this intelligence, you might not even realize your detection rates are dropping until it’s too late. And when you do, you’re dependent on the vendor’s timeline (and willingness) to update or retrain their model. Innovation slows to the vendor’s pace. Many FIs mistakenly assume that if a third-party tool is “AI-powered” and comes with compliance assurances, they can take a hands-off approach. That is a dangerous misconception: you still need to test the outputs, ask the right questions up front, and build oversight into the process. In reality, you must actively govern any outsourced AI as if it were your own internal system.

There’s also the issue of blind spots and bias. A closed vendor model is often trained on who-knows-what data; if it misclassifies customers or exhibits bias, your team might never know until a scandal erupts. And if regulators or auditors probe a particularly odd decision (say, why was Customer X treated as high risk?), responding with “our vendor’s algorithm decided so, and we have no further insight” is a nightmare scenario. In the end, you’re accountable for every alert cleared or filed. Without transparency, you’re effectively betting your banking license on a black box. No wonder compliance leaders at top-tier institutions are increasingly skeptical of vendor AI that can’t be explained. They’ve seen this movie before: big promises, little transparency, and painful conversations with examiners.

Why Top-Tier Institutions Demand Transparency and Control

The market is undergoing a clear shift: banks, fintechs, and crypto and stablecoin companies are demanding control over their risk models. According to industry research, modern AML platforms are expected to do more than churn out alerts; institutions now require solutions that let them customize detection rules and provide transparent, explainable decision-making to regulators. In other words, it’s no longer acceptable for a vendor to say “just trust our AI.” Buyers want the ability to peek under the hood and turn the dials themselves. They know their business and risk profile best, and they want AI tools that can adapt to sector-specific risks and be tuned to their unique needs.

We can see this demand reflected in how newer players pitch their products: for many buyers, the ability to explain decisions with conviction is a prerequisite. They want to know: can our analysts and auditors see why the AI flagged something? Can we adjust the system if it’s too sensitive or not sensitive enough? Can we own the logic instead of being handcuffed by a vendor’s one-size-fits-all model?

Crucially, control doesn’t mean sacrificing capability. The best solutions offer both high-tech AI and user configurability. The goal: you shouldn’t have to choose between flexibility and performance or between innovation and compliance. Top-tier compliance teams insist on having it both ways: powerful AI, but on their own terms. They’ve learned that AI in compliance is not a magic box you plug in; it’s a living part of your infrastructure that you need to understand and direct. Vendors who don’t provide that transparency and flexibility are increasingly getting pushback. Why would an FI tie its fortunes to an inscrutable system, especially when regulators worldwide are raising the bar on AI governance?

Treat AI as Infrastructure, Not Just Another Feature

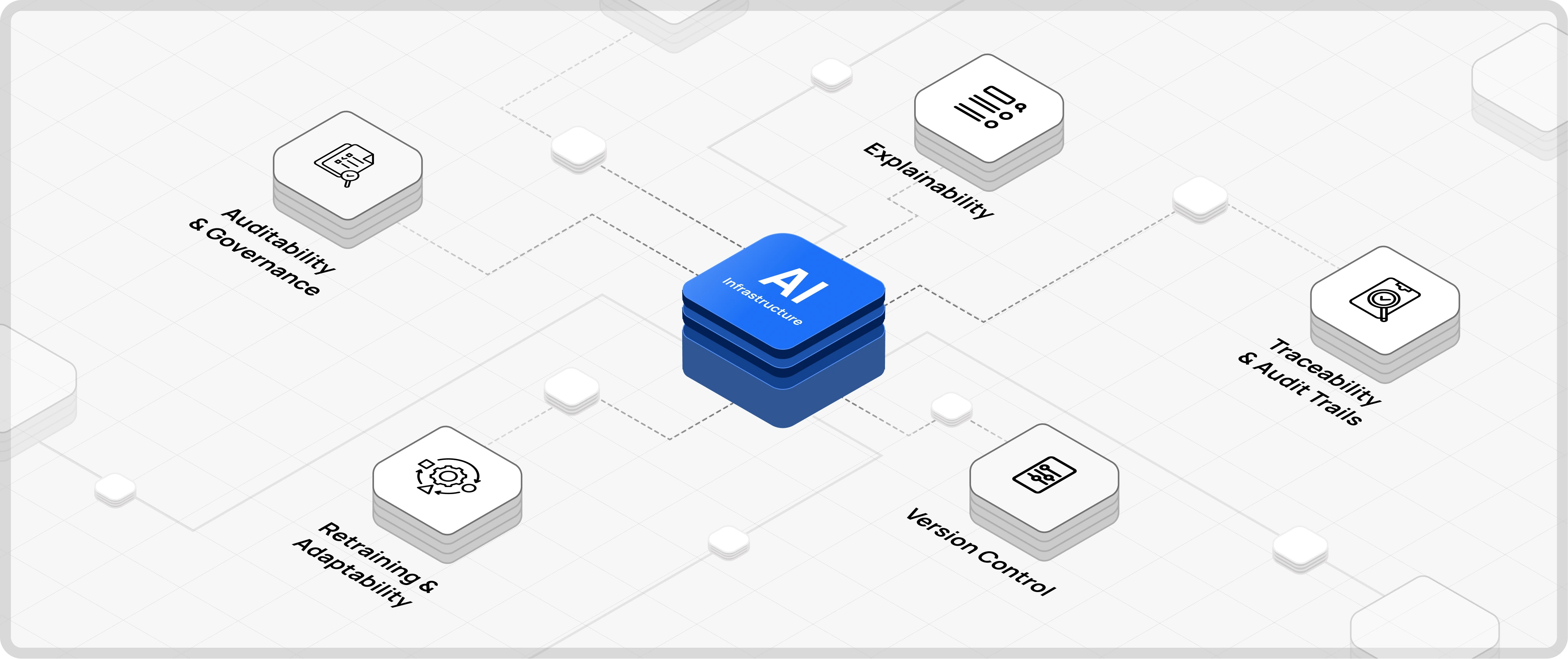

A key mindset shift is happening in the industry: AI is now becoming a core infrastructure for compliance. And like any infrastructure, it requires governance, maintenance, and oversight. You wouldn’t deploy a new transaction monitoring engine without knowing how to tune it, log its outputs, and control changes; AI is no different. In practice, treating AI as infrastructure means insisting on a few non-negotiables: traceability, version control, explainability, retraining capability, and auditability.

Let’s break those down:

- Traceability & Audit Trails: Every decision the AI makes should be recorded and traceable. You need to know which rules or model version triggered an alert and on the basis of what data. Maintaining logs of how decisions are made is essential for regulatory reviews and internal audits. Under emerging laws like the EU AI Act, providers must retain decision logs and enable traceable audit trails for high-risk AI systems. If an AI flagged a transaction, you should be able to trace why step by step.

- Version Control: Your compliance team should be able to track changes to AI models or detection rules over time. When the model is updated or thresholds adjusted, it must be versioned and documented. This way, if something goes awry (say false positives spike in October), you can pinpoint if a model update in September might be the culprit. Version control of AI policies also means you can roll back if needed, or audit how the system’s behavior has evolved.

- Explainability: Any AI-driven alert or score needs to come with an explanation that humans can understand. This doesn’t mean dumbing it down to “AI magic”; it means providing the key factors and reasoning in plain language. If a customer was flagged high-risk, was it due to a sudden burst of transactions to a new country, an outlier behavior against their profile, a name match to a sanctions list? Compliance officers and even customers (under certain regulations) have a right to know the why. Explainable AI is crucial for building trust, both with internal stakeholders and regulators.

- Retraining & Adaptability: Financial crime patterns evolve quickly, and your AI needs to as well. Treating AI as infrastructure means setting up a pipeline for continuous improvement: feeding back new data and analyst feedback to retrain models so they don’t grow stale. You should ask vendors how their models learn over time and how often they’re updated. If the answer is “we update the model once a year” or “that’s proprietary,” consider it a red flag. Without regular tuning, models can drift off-course, generating more false positives or missing new typologies. An AI strategy built to last will include a retraining loop (with proper validation) to incorporate evolving risks and institutional knowledge.

- Auditability & Governance: You must be able to audit the AI’s decisions and the overall model performance at any time. This goes beyond individual decision traceability; it means having governance controls around the AI. For example, who reviewed and approved the model before deployment? Is the model’s documentation accessible and up to date? Can you produce evidence of the model’s validation and its limitations? Regulators increasingly expect that AI models be subject to the same controls and testing as any other high-risk model, including bias testing, performance monitoring, and documented governance review. In practice, a vendor should provide you the means to audit their AI, whether through built-in reports or by allowing external validation. If a vendor won’t let you see under the hood for an audit, that’s a huge warning sign.
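To make the traceability, version-control, and explainability points above concrete, here is a minimal Python sketch of what a per-decision audit record could look like. The schema, the class names (`DecisionRecord`, `AuditLog`), and the hash-chaining approach are illustrative assumptions, not a regulatory template or any vendor's actual format.

```python
import hashlib
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One AI decision with the context an auditor would ask for (hypothetical schema)."""
    alert_id: str
    model_version: str      # which model or rule-set version produced the decision
    outcome: str            # e.g. "escalated" or "auto_cleared"
    factors: tuple          # plain-language reasons behind the outcome
    input_snapshot: dict    # the data the decision was based on
    decided_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class AuditLog:
    """Append-only log. Each entry's hash covers the previous hash,
    so a later edit to any stored entry is detectable."""

    def __init__(self):
        self._entries = []

    def append(self, record: DecisionRecord) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else ""
        payload = json.dumps(asdict(record), sort_keys=True)
        entry_hash = hashlib.sha256((prev_hash + payload).encode()).hexdigest()
        self._entries.append({"payload": payload, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the hash chain and report whether it is intact."""
        prev_hash = ""
        for entry in self._entries:
            expected = hashlib.sha256(
                (prev_hash + entry["payload"]).encode()
            ).hexdigest()
            if expected != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True

    def by_model_version(self, version: str) -> list:
        """Return all decisions made by a given model version."""
        records = [json.loads(e["payload"]) for e in self._entries]
        return [r for r in records if r["model_version"] == version]


# Example usage with made-up data
log = AuditLog()
log.append(DecisionRecord(
    alert_id="A-1",
    model_version="model-1.0",
    outcome="escalated",
    factors=("burst of transfers to a new country",),
    input_snapshot={"amount": 9500, "destination": "XX"},
))
log.append(DecisionRecord(
    alert_id="A-2",
    model_version="model-1.1",
    outcome="auto_cleared",
    factors=("date of birth did not match watchlist entry",),
    input_snapshot={"name_score": 0.62},
))
```

The point of the design is that each record answers three audit questions at once: which version decided, on what data, and for what stated reasons. The hash chain is one simple way to show the log has not been altered after the fact; a production system would more likely rely on write-once storage or a database with its own integrity controls.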

When AI is treated as core infrastructure, it is embedded in your compliance workflow with full oversight, not bolted on as an inscrutable add-on. This mindset forces vendors and in-house teams alike to prioritize things like human-in-the-loop controls, fallback mechanisms, and rigorous documentation. For example, the ability for a human analyst to review or override AI decisions is essential for safety and accountability. Similarly, strong infrastructure-minded AI solutions will have mechanisms to detect “drift” and keep outputs consistent, just as you’d expect of any mission-critical software in an FI.
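One simple way to connect version control with drift monitoring is to compare analyst-confirmed false-positive rates across model versions. The sketch below assumes you can export reviewed alerts as `(model_version, was_false_positive)` pairs; the function names and the 5-percentage-point tolerance are illustrative choices, not an industry standard.

```python
from collections import defaultdict


def false_positive_rate_by_version(reviewed_alerts):
    """Compute the share of alerts analysts judged to be false positives,
    grouped by the model version that raised them.

    reviewed_alerts: iterable of (model_version, was_false_positive) pairs.
    """
    totals = defaultdict(int)
    false_positives = defaultdict(int)
    for version, was_false_positive in reviewed_alerts:
        totals[version] += 1
        if was_false_positive:
            false_positives[version] += 1
    return {v: false_positives[v] / totals[v] for v in totals}


def regressed_versions(rates, baseline_version, tolerance=0.05):
    """List versions whose false-positive rate exceeds the baseline's
    by more than `tolerance` (an assumed, adjustable threshold)."""
    baseline = rates[baseline_version]
    return sorted(
        version
        for version, rate in rates.items()
        if version != baseline_version and rate - baseline > tolerance
    )


# Example usage with made-up review outcomes
reviewed = (
    [("v1", True)] * 8 + [("v1", False)] * 2      # 80% false positives
    + [("v2", True)] * 19 + [("v2", False)] * 1   # 95% false positives
)
rates = false_positive_rate_by_version(reviewed)
flagged = regressed_versions(rates, baseline_version="v1")
```

With versioned decisions, a spike like this can be traced to a specific release and rolled back or investigated, rather than discovered months later as an unexplained increase in analyst workload.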

Flagright’s Transparent AI Approach: A Case Study

How can these principles be applied in practice? Let’s look at an example: Flagright, an AI-native transaction monitoring & AML compliance solution that deliberately built its AI systems with transparency and oversight from day one. Flagright’s approach stands in stark contrast to the black-box paradigm. Rather than a generic, off-the-shelf model hidden behind an API, Flagright’s AI algorithm is a purpose-built AML compliance AI that integrates directly into the compliance workflow. It acts as an extension of the compliance team, and here’s what that looks like in concrete terms:

- Every AI decision is explainable and logged: Flagright ensures full transparency and auditability for each action the AI takes. All decisions by the AI agents come with a written narrative explaining why an alert was cleared or escalated. For example, if the AI auto-clears a sanctions screening alert, the system might log a note: “Auto-cleared by AI: middle name and date of birth did not match the watchlist entry, indicating a likely false positive.” These explanations are stored as part of the case, so auditors or investigators can review exactly what the AI did and why. Nothing happens in a black hole; every automated clearance is recorded, timestamped, and tied to the AI agent’s ID, providing a complete audit trail. This level of detail gives compliance officers confidence that the AI isn’t doing anything “in the dark” or beyond oversight.

- Human oversight and configurable control: Flagright’s AI was built with a compliance-first mindset, meaning the human team remains firmly in control. Compliance teams can define parameters for the AI agent; for instance, deciding what types of alerts it can auto-resolve versus which should always go to a human. If the organization is only comfortable auto-clearing low-risk alerts (like obvious false name matches) but wants a human to review anything slightly complex, they can set that rule. The AI effectively operates under management’s risk appetite. This is vastly different from a vendor telling you “the model will do XYZ and you can’t change it.” Here, the institution sets the guardrails. Moreover, Flagright’s platform allows an easy review of AI-cleared alerts. Compliance officers can randomly sample what the AI has done, verify the correctness of its decisions, and provide feedback which the AI uses to continuously improve. It’s a closed loop of accountability: humans train the AI, the AI assists the humans, and humans oversee the AI.

- No hidden logic or vendor lock-in: Because Flagright’s system was designed as AI-native and transparent, the institution has full visibility into how detection logic works. There are no secret scoring factors that the client isn’t aware of. Every risk score or alert comes with the contributing factors identified. Flagright’s architecture provides a “natural-language explanation and a visual audit trail” for each decision, and it lets institutions see and even tailor the detection rules or model configurations. This means no vendor lock-in via mystery algorithms; if a compliance team wants to adjust how the AI prioritizes certain risk factors, they can do so in collaboration with Flagright. It’s AI as a collaborative tool, not a dictate. As a result, when regulators ask “how does your system decide something is suspicious?”, the FI can pull back the curtain confidently. Flagright also enables generating audit-ready reports that justify decisions, aligning with what regulators expect to see.

- Alignment with regulatory expectations: By building transparency and traceability in, Flagright’s AI Forensics inherently meets the growing expectations of regulators and auditors. Recall the requirements discussed earlier: audit trails, version control, and explainability are all baked into the platform. Flagright’s team understood that in compliance, an AI that can’t explain itself is a non-starter. The platform was therefore designed to make every model decision interpretable, every change trackable, and every outcome defensible. This keeps regulators satisfied and also gives internal risk committees and model validation teams peace of mind. In practice, Flagright’s clients have used the AI Forensics logs and narratives during audits to show regulators exactly how false positives are being reduced without sacrificing oversight. That’s the ideal scenario: innovation with accountability.
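The guardrail and sampling ideas described in this case study can be sketched generically. The Python below is not Flagright's API; `AutoResolvePolicy`, `route_alert`, `sample_for_qa`, and every field name are hypothetical, chosen only to show how an institution-defined risk appetite might gate what an AI agent is allowed to auto-resolve and how a share of its decisions could be pulled for human review.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class AutoResolvePolicy:
    """Institution-defined guardrails for an AI agent (hypothetical fields)."""
    allowed_alert_types: frozenset   # alert types the AI may close on its own
    max_risk_score: float            # anything above this always goes to a human
    qa_sample_rate: float            # share of AI-cleared alerts re-checked by humans


def route_alert(alert: dict, policy: AutoResolvePolicy) -> str:
    """Decide whether the AI may resolve an alert or a human must review it."""
    within_scope = alert["type"] in policy.allowed_alert_types
    low_risk = alert["risk_score"] <= policy.max_risk_score
    return "ai_auto_resolve" if within_scope and low_risk else "human_review"


def sample_for_qa(auto_cleared_ids, policy: AutoResolvePolicy, seed=None) -> list:
    """Randomly pick AI-cleared alerts for human quality review.
    Always samples at least one alert when any were cleared."""
    ids = list(auto_cleared_ids)
    if not ids:
        return []
    sample_size = min(len(ids), max(1, round(len(ids) * policy.qa_sample_rate)))
    return random.Random(seed).sample(ids, sample_size)


# Example usage with made-up alerts
policy = AutoResolvePolicy(
    allowed_alert_types=frozenset({"sanctions_name_match"}),
    max_risk_score=0.2,
    qa_sample_rate=0.1,
)
low_risk_match = {"type": "sanctions_name_match", "risk_score": 0.1}
structuring = {"type": "structuring_pattern", "risk_score": 0.1}
risky_match = {"type": "sanctions_name_match", "risk_score": 0.7}

decisions = [route_alert(a, policy) for a in (low_risk_match, structuring, risky_match)]
qa_batch = sample_for_qa([f"A-{i}" for i in range(20)], policy, seed=7)
```

Keeping the policy as explicit, versionable configuration (rather than behavior buried in a model) is what lets a compliance team show an examiner exactly which decisions were delegated to automation and which were not.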

The Flagright example illustrates that it’s entirely possible to have cutting-edge AI in AML and fraud prevention while still maintaining control and clarity. It dispels the myth that “AI = black box.” When engineered correctly, AI can function as a glass box: you see everything it’s doing, and it becomes an infrastructure layer that your team manages much like your rules engine. Flagright chose that path, and its clients can now deploy AI agents that slash false positives by over 90% while remaining compliant and audit-ready. The takeaway for compliance leaders is that you don’t have to accept opacity in order to leverage AI. Transparency and performance can go hand in hand.

Checklist: How to Evaluate AML/Fraud AI Vendors

When you’re assessing AI solutions for anti-financial crime, keep a critical eye. Use the following checklist to ensure any vendor’s offering meets the high standards of both innovation and compliance:

- Explainability: Can the vendor clearly explain how their AI makes decisions in plain language? Do they provide a rationale for each alert (e.g., which factors led to a flag) that your investigators and regulators could understand? If all you get is “trust us, it’s AI,” run the other way.

- Transparency & Audit Trails: Does the system log every decision and provide an audit trail? You should be able to trace why a particular alert was generated, what data was considered, and how the risk score was determined. Auditability is key; ask the vendor to demonstrate how you’d audit the AI’s decisions after the fact.

- Configurability & Control: Check if you can tune or configure the AI to suit your risk appetite. Can you adjust thresholds, risk scoring logic, or what the AI is allowed to auto-resolve? Top institutions demand the ability to customize detection rules and models rather than accepting a one-size-fits-all black box. Your team should retain control over how the AI operates in your environment.

- Versioning & Updates: How does the vendor manage model updates? Ensure they have version control for their AI models and policies; every change should be documented and reversible. Also clarify the retraining process: Is the model updated continuously with new data or only periodically? If the vendor can’t articulate how they keep the AI fresh (or if they won’t let you know when it changes), that’s a problem. Remember, models can drift and lose accuracy if not regularly updated.

- Vendor Transparency & Support: Will the vendor give you access to documentation on the model’s design, assumptions, and known limitations? Do you have rights to audit the vendor’s AI, or at least get detailed reports about its performance? Also, test their willingness to answer tough questions: Ask how the model handles a specific scenario or why it made a past decision. If the answer is evasive or “that’s proprietary,” consider it a red flag for a long-term partnership.

- Regulatory Alignment: Ask the vendor how their solution helps you meet regulatory requirements. Do they have features for model validation, bias testing, and human-in-the-loop review? For example, can the AI’s recommendations be overridden and are those overrides logged? A strong vendor should speak the language of compliance and model risk management, citing things like audit logs, governance, and alignment with guidelines (e.g., FATF’s expectations or OCC guidance on AI models). This shows they understand both the tech and the compliance context.

- Effectiveness with Accountability: Finally, require evidence that the AI actually improves outcomes without sacrificing accountability. A credible vendor will share metrics like false positive reduction rates, case studies, or client references, and will emphasize how they maintain explainability alongside those gains. Beware of those offering miraculous “95% detection with zero effort” claims with no mention of explainability or auditability.

Using this checklist, you can cut through the hype and identify whether an AI vendor is a true partner or just selling opaque promises. The best providers will welcome these questions; they’ll be eager to show how their system is configurable, transparent, and built for compliance first. Vendors who get defensive or cannot demonstrate these qualities likely haven’t designed their solutions for the stringent needs of regulated institutions.

Conclusion: Own Your AI (Intelligence) Strategy

In the rapidly evolving world of financial crime compliance, AI will indeed be a game-changer, but only if implemented with care, transparency, and control. As compliance, AML, and risk leaders, you should approach AI not as a mystical outsource to “someone else’s intelligence,” but as a critical piece of your own organization’s strategic infrastructure. That means demanding clarity at every turn: How does it work? Why did it decide that? What if we need to change something?

Building your AI strategy on someone else’s black-box model is a recipe for regulatory trouble and strategic stagnation. You might get short-term convenience, but at the cost of long-term agility and accountability. The better path, as forward-looking institutions are discovering, is to insist on AI solutions that are open and accountable. Whether you partner with a vendor like Flagright that shares this philosophy, or develop in-house, the guiding principle is the same: you retain the intelligence and insight into your risk models. AI should empower your compliance team, not obscure their vision.

As you plan for the next wave of AML and fraud tech, remember this mantra: transparency over opacity, collaboration over blind outsourcing. The institutions that heed this will unlock the real competitive advantage of AI, which comes from combining machine efficiency with human judgment in a governed, visible way. Don’t let anyone convince you that you must sacrifice oversight for innovation. The future of compliance AI belongs to those who refuse to build their strategy on “someone else’s intelligence” and instead harness AI as an accountable, flexible asset within their own enterprise. In this new era, owning your AI strategy, in every sense of the word “own,” will be the ultimate differentiator in safeguarding your business and staying ahead of financial crime.
